Introduction

Building new systems is about more than new hardware and software – it may also include changes to processes, jobs, skills, management and organisations.

The objectives of this chapter are to:

- Describe the relationship between system development and organisational change

- Explain business process re-engineering and process improvement

- Briefly describe Total Quality, Six Sigma and Benchmarking

- Provide an overview of system development

- Describe Systems Investigation; Systems Analysis; Systems design; Programming; Testing; Implementation; Production and Maintenance

- Describe various system development approaches

- Traditional System Development Life Cycle; Prototyping; End-User Development; Application Software Packages; Outsourcing

- Provide an overview of some contemporary approaches to application development

- JAD; RAD; Component-based Development and Web Services

BUILDING SYSTEMS AND PLANNED ORGANISATIONAL CHANGE

The process of building and implementing a new information system will bring about change in the organisation. The introduction of new information systems has a far greater impact than the purchase and deployment of new pieces of technology. It also includes changes to business processes, jobs, skills and even the structure of the organisation. System builders must consider how the nature of work and business processes will change.

System Development and Organisational Change

According to Laudon & Laudon (2010), information technology can enable the following four kinds of organisational change:

- Automation: this involves using computers to speed up the performance of existing tasks by eliminating the need for manual activity. This approach to organisational change may release staff to other jobs, reduce the number of employees needed, or enable the organisation to process more transactions.

- Rationalisation of procedures: this refers to streamlining standard operating procedures and eliminating some tasks in a process in order to remove any blockages.

- Business process reengineering refers to the radical redesign of business processes. It can involve combining tasks in a process to cut waste and eliminating repetitive, labour-intensive tasks in order to improve cost and quality and to maximise the benefits of information technology.

- A paradigm shift is a radical change in the business and the organisation. The strategy of the business can be changed and sometimes even the business the company is in.

Business Process Re-engineering

Business process re-engineering (BPR) is a management practice that aims to improve the efficiency of the business processes. Reengineering is a fundamental rethinking and radical redesign of business processes to achieve major improvements in performance, cost, quality, speed and service.

Companies should identify a few core business processes to be redesigned, focusing on those with the greatest potential return. Identifying the business processes with the highest priority includes looking at those which are crucial to the business strategy of the company and those where there are already issues and problems.

After identification of the core processes for re-designing, the business process itself must be analysed in terms of its inputs and outputs, flow of products or services, activities, resources etc. The performance of the existing processes must be measured and used as a baseline. Business processes are typically measured along the following dimensions:

- Process cost

- Process time

- Process quality

- Process flexibility

Rather than designing the process in isolation and then looking at how Information technology can support it, information technology should be allowed to influence process design from the start. Once a business process is understood, a variety of techniques or principles can be used to improve it, such as:

- Replace sequential steps in the process with parallel steps

- Enrich jobs by enhancing decision authority and concentrating information

- Enable information sharing throughout to all participants

- Eliminate delays

- Transform batch processing and decision making into continuous flow processes

- Automate decision tasks where possible

Following these steps does not guarantee that reengineering will always be successful because the required organisational changes are often very difficult to manage. Therefore companies will also need to develop a change management strategy to deal with the resistance to changes that is likely to occur among the people impacted by the planned changes.

New information system software provides businesses with new tools to support process redesign. Work flow management offers the opportunity to streamline procedures for companies whose primary business was traditionally focused on processing paperwork. Instead of multiple people handling a single customer in serial fashion, work flow management software speeds up the process, by allowing several people to work on the electronic form of the document at the same time, and it can also decrease the total number of people who handle it.

Process Improvement

While business process re-engineering might be a one-off effort that focuses on processes that need radical change, organisations have many business processes that must be constantly revised to keep the business competitive. Business process management and quality improvement programs provide opportunities for more incremental and ongoing types of business process change.

Business Process Management (BPM)

Business Process Management (BPM) is an effort to help organisations manage the process changes that are required in many areas of the business. BPM involves analysing every task in a business and helping firms continually optimise them. It includes work flow management, business process modelling, quality management, change management and standardising processes throughout the organisation. Every business should continually analyse how it accomplishes each task and look for possible ways to improve it.

Total Quality Management

In addition to business process management, Total Quality Management (TQM) is used to make a series of continuous improvements rather than dramatic bursts of change. Many organisations are using TQM to make quality control the responsibility of all the people and functions within the organisation. Traditionally, quality was the responsibility of the quality control department, whose job was to identify and remove mistakes after they had occurred. However, trying to control mistakes after they have occurred is very difficult, as many quality defects are embedded in the finished product and are essentially hidden, making them more difficult to discover. Despite an organisation’s best efforts, certain mistakes remained hidden and undetected. The Total Quality Management (TQM) approach emphasises preventing mistakes rather than finding and correcting them. To achieve this goal, the responsibility for quality is moved from the quality control department to everyone in the organisation.

Deming and Juran, who are considered to be the fathers of TQM, were both Americans, but it was the Japanese in the 1950s who embraced their ideas. Deming’s view was that by improving quality, costs would be reduced due to less reworking, fewer mistakes, fewer delays and better use of time. He believed this approach would lead to greater productivity and enable the company to gain a larger share of the market because of lower costs and higher quality. As maintaining quality became embedded in the organisation, over time it would cost less.

The successful application of the TQM concepts by Japanese companies in the 1970s and their subsequent success in world markets led to organisations across the world taking on board total quality initiatives in an attempt to cope with increased competition.

TQM is an all-encompassing approach to managing quality where the organisation tries to achieve total quality products/services through the involvement of the entire organisation, with customer satisfaction as the driving force.

Six Sigma

Six Sigma is another improvement approach that stresses quality by designating a set of methodologies and technologies for improving quality and reducing costs.

Six Sigma is a set of practices originally developed by Motorola to systematically improve processes by eliminating defects. A defect is defined as nonconformity of a product or service to its specifications.

While the particulars of the methodology were originally formulated by Bill Smith at Motorola in 1986, Six Sigma was heavily inspired by six preceding decades of quality improvement methodologies such as quality control, TQM, and Zero Defects. Like its predecessors, Six Sigma asserts the following:

- Continuous efforts to reduce variation in process outputs are fundamental to business success

- Manufacturing and business processes can be measured, analysed, improved and controlled

- Succeeding at achieving sustained quality improvement requires commitment from the entire organisation, particularly from top-level management

The term “Six Sigma” refers to the ability of highly capable processes to produce output within specification. In particular, processes that operate with Six Sigma quality produce products with defect levels below 3.4 defects per (one) million opportunities (DPMO). Six Sigma’s implicit goal is to improve all processes to this level of quality or better.
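The DPMO figure quoted above can be made concrete with a little arithmetic. The following Python sketch is illustrative only: the function names and the sample figures are assumptions made for this example, not part of the Six Sigma methodology itself.

```python
# Threshold quoted in the text: Six Sigma quality means fewer than
# 3.4 defects per million opportunities (DPMO).
SIX_SIGMA_DPMO = 3.4


def dpmo(defects: int, units: int, opportunities_per_unit: int) -> float:
    """Return defects per million opportunities."""
    total_opportunities = units * opportunities_per_unit
    if total_opportunities <= 0:
        raise ValueError("units and opportunities_per_unit must be positive")
    return defects * 1_000_000 / total_opportunities


def meets_six_sigma(defects: int, units: int, opportunities_per_unit: int) -> bool:
    """True when the measured defect level is below the Six Sigma threshold."""
    return dpmo(defects, units, opportunities_per_unit) < SIX_SIGMA_DPMO
```

For instance, 17 defects found in 1,000 units that each offer 5 opportunities for a defect works out at 3,400 DPMO, which is far short of the Six Sigma level.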

The basic methodology consists of the following five steps:

- Define the process improvement goals that are consistent with customer demands and business strategy.

- Measure the current process and use this for future comparison.

- Analyse to verify relationship between factors. Determine what the relationship is and attempt to ensure that all factors have been considered.

- Improve or optimise the process based upon the earlier analysis. In this phase, project teams seek the optimal solution and develop and test a plan of action for implementing and confirming the solution.

- Control to ensure that any variances are corrected before they result in defects. Set up pilot runs to establish process capability, move to full production and continuously measure the process.

Benchmarking

Benchmarking involves setting strict standards for products, services and other activities and then measuring performance against those standards. Companies may use external industry standards, standards set by competitors, internally generated standards or a combination of all three.

SYSTEM DEVELOPMENT

The set of activities that are involved in producing an information system are called system development. The activities involved in system development include:

- System Investigation (including feasibility study)

- Systems analysis

- Systems design

- Programming

- Testing

- Implementation (including conversion or changeover)

- Production and maintenance.

This phased approach to system development is referred to as the System Development Life Cycle (SDLC). A number of alternative software development approaches are described later in this chapter.

Note: The number of steps in traditional systems development might vary from one company to the next, but most approaches share the following common phases: investigation, analysis, design, programming/testing, implementation, and lastly maintenance and review.

System Investigation

The system investigation (sometimes referred to as system definition) is the first stage of the SDLC. At this stage the business problem (or business opportunity) is investigated to define the problem, to identify why a new system is needed and to define the objectives of the proposed system. The problem may relate to an existing system that is not able to handle the workload, is not working properly or is not capable of handling some new product or service. The system investigation stage will look at the feasibility of a system solution to the business problem.

Feasibility Study

The systems investigation stage would include a feasibility study to determine whether the proposed solution is feasible or not. The feasibility is assessed from a number of perspectives:

- Financial feasibility: This involves investigating the costs and benefits of the proposed system. The aim is to establish whether or not the proposed system is a good investment and if the organisation can afford the expense. A number of different methods can be used to assess the cost-benefit of the different system proposals. The methods include breakeven analysis, return on investment calculations or time value of money calculations. Each method involves calculating the total tangible costs and benefits of a new system. Typical costs include development, new hardware and training. Typical benefits include savings from improved efficiency, improved stock control and reduced staffing costs. It may also be necessary to establish intangible costs and benefits. Even though these can be difficult to estimate, they can be important indicators of a system’s feasibility. An example of an intangible cost might be the disruption to the organisation during, and for a short time after, the implementation of a new ERP system, while an improved image of the company as a result of launching a new e-commerce site could be an intangible benefit.

- Technical feasibility: This relates to the ability of the organisation to construct and implement the particular system in terms of expertise and knowledge of the technology involved. It is important to assess the IT department’s experience and skills in relation to systems development and the software and hardware being used. Questions to be addressed include: is the technology needed by the system available, and does the organisation have the expertise available to handle such technology?

- Organisational feasibility: This involves investigating how the new system or changes to the existing system will support the current and future business strategy, plans and objectives.

- Operational feasibility: This involves examining the ability of the organisation to accept and use the new system. The issues that should be examined under operational feasibility include company culture and workforce skill and possible existing agreements with unions that could be impacted upon.

- Schedule feasibility: This looks at the time frame of the proposed development. For example, is there a critical date that needs to be met for the system’s implementation, and can it realistically be achieved?
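The financial feasibility methods named above (breakeven analysis, return on investment and time value of money) can be sketched in a few lines of Python. This is a minimal illustration: the function names, the 10% discount rate and the cash-flow figures are all hypothetical, and a real cost-benefit analysis would also have to weigh the intangible costs and benefits.

```python
def return_on_investment(total_benefits, total_costs):
    """Net benefit expressed as a fraction of the total cost."""
    return (total_benefits - total_costs) / total_costs


def breakeven_year(cash_flows):
    """First year in which cumulative cash flow is no longer negative.

    cash_flows[0] is year 0 (normally the negative initial outlay).
    Returns None if the project never breaks even.
    """
    cumulative = 0
    for year, cash_flow in enumerate(cash_flows):
        cumulative += cash_flow
        if cumulative >= 0:
            return year
    return None


def net_present_value(rate, cash_flows):
    """Time value of money: discount each year's cash flow back to year 0."""
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cash_flows))


# Hypothetical proposal: 100,000 up front, then 40,000 of net benefit per year.
proposal = [-100_000, 40_000, 40_000, 40_000, 40_000]
roi = return_on_investment(total_benefits=160_000, total_costs=100_000)
payback = breakeven_year(proposal)
npv = net_present_value(0.10, proposal)
```

With these made-up figures the proposal breaks even in year 3, returns 60% on the investment, and has a net present value of roughly 26,795 at a 10% discount rate. Running the same functions over each alternative solution gives the steering group comparable numbers.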

Typically several alternative solutions will be investigated. The feasibility of each solution is assessed and a report is written identifying the costs and benefits and advantages and disadvantages of each. It is then up to management or a steering group to determine which proposed solution represents the best alternative. A typical feasibility report might contain the following:

- Project background and objectives of the proposed system

- A description of the current system and problems experienced with it

- An outline of a number of possible solutions and an evaluation of the feasibility of each

- A recommendation for a particular solution.

After the feasibility report is presented to the steering committee or senior management (if no steering committee exists) then a decision is made on whether or not to proceed with the system development project. If the decision is to proceed then the systems analysis phase begins.

System Analysis

Once the system development has been approved then the systems analysis stage can begin. Systems analysis is the examination of the problem that the organisation is trying to solve with an information system. This stage involves defining the problem in more detail, identifying its causes, specifying solutions, and identifying the information requirements that must be satisfied by a system solution.

To understand the business problem, the analyst must gain an understanding of the various processes. The analyst examines documents and procedures, observes end users operating the system and interviews key users of the existing systems to identify the problem areas and the objectives the solution should aim to achieve. The solution could involve building a new information system or making changes to an existing system.

Capturing Information Requirements

Information requirements capture involves identifying what information is needed, who needs it, where, when and in what formats. The requirements define the objectives of the new or modified system and contain a detailed description of the functions the new system must perform. Gathering information requirements is a difficult task for the systems analyst. Faulty requirements capture and analysis can lead to system failure and high systems development costs, as major changes may be needed to the system after implementation.

Information requirements are difficult to determine because business functions can be very complex and are often poorly defined. Processes may vary from individual to individual, and users may even disagree on what the process is or how things should be done. Defining information requirements is a demanding job that can require a large amount of research by the analyst. A number of tools are used by the analyst to document the existing and proposed systems including Data Flow Diagrams (DFDs).

System Analysis Tools

DATA FLOW DIAGRAMS (DFDs)

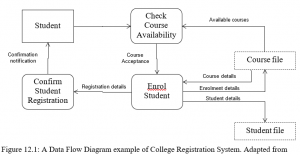

A data flow diagram (DFD) is a graphical method of showing the flow of data through a system (see Figure 12.1). It is used to show processes and data flows. Although data flow diagrams can be used in the design process, they are also useful during the analysis phase to enable users and analysts to gain a shared understanding of the system. Data flow diagrams enable the systems analyst to document systems using what is referred to as a structured approach to systems development. Only four symbols are needed for data flow diagrams: entity, process, data flow and data store.

- Entity Symbol: This is the source or destination of a data or information flow. An entity can be a person, a group of people or even a place.

- Process Symbol: Each process symbol contains a description of a function to be performed. Typical processes include Enter Data, Verify Data and Update Record.

- Data Flow: The flow line indicates the flow of data or information.

- Data Store: These symbols identify storage locations for data, which could be a file or database.

Because the notation is simple, users easily understand it. Users can check the DFDs for problems or inaccuracies so that they can be changed before other design work begins. Data flow diagrams allow the analyst to examine the data that enters a process and the data that leaves the process and to see how it has been changed. This can help the analyst gain a fuller understanding of the process.

Data flow diagrams are part of the system documentation. They show the logical view of the system. They show what is happening, not how that event occurs.

Data flow diagrams describe the system in a top-down approach. High-level DFDs can be drawn to give a high-level, summarised view of the system. More detailed DFDs can be drawn for particular parts of the system, where more detail is required.

Figure 12.1: A Data Flow Diagram example of a College Registration System. Adapted from Laudon & Laudon (2010).

The advantage of using data flow diagrams is that they can be used to show a very general, high-level view of the system or a very detailed view of a part of the system using the same tools. Anyone can view the overall system and then drill down through the DFDs to lower levels of the system.
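To make the four DFD symbols more tangible, the sketch below records a small diagram as plain Python data and then lists the data entering and leaving one process, which is the kind of examination described above. The node and flow names are invented for illustration and are not taken from Figure 12.1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    kind: str  # "entity", "process" or "store"


@dataclass(frozen=True)
class DataFlow:
    source: Node
    target: Node
    data: str  # label on the flow line


# Hypothetical fragment of a registration system.
student = Node("Student", "entity")
verify = Node("Verify availability", "process")
enrol = Node("Enrol student", "process")
course_file = Node("Course file", "store")

flows = [
    DataFlow(student, verify, "requested courses"),
    DataFlow(course_file, verify, "open courses"),
    DataFlow(verify, enrol, "accepted selections"),
    DataFlow(enrol, student, "confirmation letter"),
]


def data_entering(process, all_flows):
    """Labels of every flow that arrives at the given process."""
    return [flow.data for flow in all_flows if flow.target == process]


def data_leaving(process, all_flows):
    """Labels of every flow that departs from the given process."""
    return [flow.data for flow in all_flows if flow.source == process]
```

Comparing `data_entering(verify, flows)` with `data_leaving(verify, flows)` shows what the process receives and what it produces, without saying anything about how the work is physically carried out.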

DECISION TABLES

A decision table is a tabular format for recording logical decisions that involves specifying a set of conditions and the corresponding actions. Decision tables are useful in cases that involve a series of interrelated decisions, as they help to ensure that no alternatives are overlooked. A decision table can provide a greater level of detail about a process than using a process diagram on its own.
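A decision table maps naturally onto a lookup structure. The following Python sketch uses a hypothetical order-handling example (the conditions and actions are assumptions made for illustration) and includes a small check that every combination of conditions has an action, which reflects the point that no alternative should be overlooked.

```python
from itertools import product

# Conditions, in the order used for the keys below (hypothetical example).
CONDITIONS = ("credit_ok", "in_stock")

# Each rule: a combination of condition values -> the action to take.
DECISION_TABLE = {
    (True, True): "ship order",
    (True, False): "back-order",
    (False, True): "request prepayment",
    (False, False): "reject order",
}


def missing_rules(table, number_of_conditions):
    """Return every combination of condition values that has no action."""
    every_combination = product((True, False), repeat=number_of_conditions)
    return [combo for combo in every_combination if combo not in table]


def decide(credit_ok, in_stock):
    """Look up the action for one set of condition values."""
    return DECISION_TABLE[(credit_ok, in_stock)]
```

With two yes/no conditions there are four possible rules; `missing_rules(DECISION_TABLE, len(CONDITIONS))` returns an empty list only when all four are covered.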

DECISION TREES

A decision tree is an alternative approach for analysing decisions, whereby decision options are represented as branches on a tree-like diagram. Decision trees are particularly useful where many complex decision choices need to be taken into account. They provide an effective structure in which alternative decisions and the implications of taking those decisions can be represented. Decision trees can be used to define what the decisions are, their sequence and outcomes.
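A decision tree can be sketched as a nested structure in which each node asks one question and each branch leads either to another question or to an outcome. The example below is a minimal Python illustration; the questions, outcomes and the `walk` helper are hypothetical.

```python
# Each internal node is a dict holding a question and a "yes"/"no" branch.
# Each leaf is a plain string describing the outcome.
DECISION_TREE = {
    "question": "credit_ok",
    "yes": {
        "question": "in_stock",
        "yes": "ship order",
        "no": "back-order",
    },
    "no": "reject order",
}


def walk(tree, answers):
    """Follow the branches chosen by `answers` and return (outcome, path).

    `answers` maps each question name to True or False. The path records
    the sequence of decisions taken, in order.
    """
    node = tree
    path = []
    while isinstance(node, dict):
        question = node["question"]
        branch = "yes" if answers[question] else "no"
        path.append((question, branch))
        node = node[branch]
    return node, path
```

The returned path makes the sequence of decisions explicit, which is what distinguishes the tree view from a flat table of rules.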

Systems Design

The purpose of the systems design phase is to show how the system will fulfil the information requirements specified in the system analysis phase. The system designer draws up specifications that will deliver the functionality identified during the systems analysis phase. The systems design specification should contain details of system inputs, outputs and interfaces. It should also contain specifications for hardware, software, databases, telecommunications, networks, processes and people.

Walkthrough

A walkthrough is a review by a small group of people of a system development project usually presented by the creator of the document or item being reviewed. Walkthroughs can be used to review a data-flow diagram, a structure chart, form designs, systems screens etc. Walkthroughs generally include specification walkthroughs, design walkthroughs and test walkthroughs.

Logical design

This involves laying out the parts of the system and their relationship to each other as they would appear to users. The emphasis is on what the system will provide in terms of functionality rather than how the system will be implemented physically. The logical design will include inputs, outputs, processing, controls etc.

Physical Design

The physical system design specifies how the system will perform its functions and will include physical specifications such as design of hardware (computers, routers etc), telecommunications, etc.

Data Driven and Process Driven Development

The term process-driven refers to an emphasis on the functions or activities of an enterprise that the system is being designed for. By improving how a process is performed, it is hoped that the system becomes more efficient. Techniques used in such a methodology concentrate on describing the processes and the input-output flows. The processes are mapped using process flow diagrams to gain a full understanding of the process. During the design phase the process is changed to take advantage of the system functionality being developed.

Data-driven development focuses on modelling the data in a system because data is less likely to change than processes. Data-driven methodologies describe the system in terms of entities, attributes and relationships. Entities are things of interest to the system in the real world, such as customers, products etc. Attributes are properties that describe the entity such as customer name, address or product description. Relationships are ways that the entities interact. Techniques used here are similar to those used in entity-relationship modelling (discussed in Chapter 6). Data-driven development techniques are often associated with the development of database systems.
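The entity, attribute and relationship vocabulary can be illustrated with a few Python data classes. This is a sketch only: the `Customer`, `Product` and `Order` classes and their fields are assumptions chosen to match the examples in the paragraph above, not a prescribed data model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Customer:
    """Entity: a customer. The fields are its attributes."""
    customer_id: int
    name: str
    address: str


@dataclass
class Product:
    """Entity: a product."""
    product_id: int
    description: str


@dataclass
class Order:
    """Entity that also carries relationships to other entities."""
    order_id: int
    customer: Customer  # each order is placed by one customer
    products: List[Product] = field(default_factory=list)  # an order contains many products


# Hypothetical sample data.
alice = Customer(1, "Alice Murphy", "12 Main Street")
order = Order(100, customer=alice)
order.products.append(Product(7, "Desk lamp"))
```

Notice that nothing here describes how an order is processed; the model records only what data exists and how it is related, which is the emphasis of the data-driven approach.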

Programming

Programming translates the design specification into software (program code) that provides instructions for the computer. Many organisations no longer do their own programming but instead outsource the building of the systems to an external software development company. They also have the option to purchase off-the-shelf software applications that meet their specific requirements from an external software vendor.

Testing

Testing is critical to the success of a system because it checks that the system will produce the expected results under specific conditions. The testing will find any errors (bugs) in the computer code. Comprehensive testing can be a time consuming and expensive process. However the cost of implementing a system with underlying errors could be far greater for the organisation. There are normally three stages of information system testing: unit testing, system testing and acceptance testing.

- Unit testing (program testing) involves testing the smallest piece of testable software in the application, usually the individual programs. The purpose of this testing is to locate errors in the code so that they can be corrected. Unit testing is normally carried out by the programmers.

- System testing, which is normally carried out on a complete, integrated system, involves testing the functionality of the information system as a whole to determine whether program modules are interacting as planned and to establish that the system meets its specified requirements.

- Acceptance testing is normally the final stage of testing performed on a system. Its purpose is to establish that the requirements defined in the analysis and design stages have been met. Acceptance testing is normally carried out by the end users of the system.

Other types of testing include regression testing, which is normally carried out after changes have been made to existing code. The aim of regression testing is to determine whether the changes made to the code interfere with anything that worked prior to the change. Performance testing is carried out to determine how a system performs in terms of responsiveness and consistency under a particular load.
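As an illustration of unit testing, the sketch below uses Python's standard `unittest` module to test one small piece of code in isolation. The `order_total` function and the test cases are hypothetical, chosen only to show the shape of a unit test.

```python
import unittest


def order_total(unit_price, quantity):
    """The unit under test: compute the total for one order line."""
    if quantity < 0:
        raise ValueError("quantity cannot be negative")
    return unit_price * quantity


class OrderTotalTest(unittest.TestCase):
    def test_typical_order(self):
        # Expected result under specific, known conditions.
        self.assertEqual(order_total(5, 3), 15)

    def test_negative_quantity_is_rejected(self):
        # Invalid input should be reported, not silently accepted.
        with self.assertRaises(ValueError):
            order_total(5, -1)


def run_tests():
    """Run the test case and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping tests like these and re-running them after every change to `order_total` is also one simple way of carrying out regression testing.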

Test Plan

Before testing is carried out, a test plan must be created. The test plan details the approach that will be used to ensure that the system meets its design specifications and requirements, and sets out the tests to be carried out. A set of tests will be prepared, generally derived from the requirements. This approach to testing is called requirements-based testing. The individuals who will carry out the testing must also be decided.

As the testing progresses, the testers formally document the results of each test, and provide error (defect) reports to the system developers. The developers correct the defects and the systems are re-tested.

Implementation

As part of the system implementation phase, new hardware may need to be acquired and, if the software has not been developed in house, it will also need to be acquired. A critical part of the implementation phase is the data conversion or changeover.

System conversion or changeover is the process involved in changing from the old system to the new system. There are four main approaches to conversion: parallel running, direct cutover, the pilot study and phased approach.

- Direct Cutover: The direct cutover or Big Bang approach involves fully replacing the old system with a new system in one move. This is generally the fastest and cheapest method of conversion, and in many situations it may be the only practical approach. However it is also the most risky method as there is no fallback if a serious problem is discovered with the new system after it has gone live.

- Parallel Running: The parallel approach involves running the old system and the new system together for a period until there is reassurance that the new system is operating correctly. This is the safest approach because if serious errors are discovered in the new system, users can revert to the old system until the problems are resolved. However, this approach is very expensive in terms of the effort and resources required to update two systems at the same time with every transaction.

- Pilot Study: The pilot study involves the new system going live in one location only or within just one part of the organisation initially. When the system is working correctly in the pilot area, it is then rolled out to the remainder of the organisation. In many cases this is not a practical approach as the new system must go live across the whole organisation simultaneously.

- Phased Approach: The phased approach introduces the new system in stages which could be one module at a time or part of the functionality in stages. This approach reduces the risk inherent in a direct changeover of the full system in one go.
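The idea behind parallel running, described in the list above, can be sketched as feeding every transaction to both systems and recording any disagreement. In the Python illustration below the two "systems" are stand-in functions and the transaction fields are hypothetical; amounts are held in whole cents to avoid rounding differences.

```python
def parallel_run(transactions, old_system, new_system):
    """Process each transaction in both systems and collect discrepancies.

    Returns a list of (transaction, old_result, new_result) tuples for
    every transaction where the two systems disagree.
    """
    discrepancies = []
    for transaction in transactions:
        old_result = old_system(transaction)
        new_result = new_system(transaction)
        if old_result != new_result:
            discrepancies.append((transaction, old_result, new_result))
    return discrepancies


# Stand-ins for the old and new systems (hypothetical).
def legacy_total(tx):
    return tx["unit_price_cents"] * tx["quantity"]


def new_total(tx):
    return sum(tx["unit_price_cents"] for _ in range(tx["quantity"]))


def faulty_total(tx):
    # Deliberately wrong: ignores the quantity.
    return tx["unit_price_cents"]


transactions = [
    {"unit_price_cents": 250, "quantity": 1},
    {"unit_price_cents": 1000, "quantity": 3},
]
```

An empty discrepancy list over a representative period is the reassurance that allows the old system to be retired. The loop also shows where the cost of this approach comes from, since every transaction is processed twice.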

Before the new system is implemented, end users are normally trained to use the new system. Documentation must be prepared on the operation and use of the new system and this will be used during training and in normal operations. Inadequate training and poor or non-existent documentation can be major factors in contributing to system failure.

Production and Maintenance

Production is the operation of the system after it has been implemented and the conversion is totally finished. During production the system will be reviewed by both users and IT specialists to determine how well it has fulfilled the original requirements, if there are any bugs in the systems, and to decide whether any changes are needed. A formal post implementation review may be carried out.

Maintenance is the carrying out of modifications to a production system to correct errors, meet new requirements, and improve the efficiency of the system. The quality of the systems analysis, design and testing phases will affect the level of system maintenance required. For example, if the requirements are not fully captured or understood at the analysis stage, then the resulting system will not meet user requirements and may need significant changes during the maintenance phase.

Modelling and Designing Systems – Structured and Object-Oriented Approaches

There are a number of alternative methodologies for modelling and designing systems. Some, such as data flow diagrams, have already been described. Structured methodologies and object-oriented development are two common methods.

Structured Methodologies

Structured methods are generally step by step, with each step building on the previous one. Traditionally, systems have been structured in an organised way. The methods used to design and build systems begin at the top and then move on to the lower levels of detail, always ensuring that the data and processes are kept separate. The designers can use data flow diagrams (DFDs) to model how the data moves through the system and the relationships between the processes. Data flow diagrams are discussed earlier in this chapter.

Two other structured methodologies are process specifications and structure charts. The aim of process specifications is to describe the processes within the data flow diagrams. They detail the logic for each process. The structure chart shows each level of design in a top-down approach, its relationship to the other levels and its place in the overall design of the system. The structured design approach first looks at the main function of a system and then splits this function into sub-functions. It then further breaks each sub-function down until the lowest level of detail has been reached.
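Top-down decomposition of this kind can be illustrated in code, with the main function at the top delegating to sub-functions, and the data held separately from the procedures that act on it. The payroll example below is a hypothetical Python sketch; the function names and figures are assumptions.

```python
# Level 2: the lowest-level sub-functions, each doing one small job.
def calculate_gross_pay(hours, hourly_rate):
    return hours * hourly_rate


def calculate_deductions(gross_pay, tax_rate_percent):
    return gross_pay * tax_rate_percent // 100


def calculate_net_pay(gross_pay, deductions):
    return gross_pay - deductions


# Level 1: the main function, split into the sub-functions above.
def process_payroll(employee):
    gross = calculate_gross_pay(employee["hours"], employee["hourly_rate"])
    deductions = calculate_deductions(gross, employee["tax_rate_percent"])
    return calculate_net_pay(gross, deductions)


# The data is a plain record, kept separate from the processes.
employee_record = {"hours": 40, "hourly_rate": 20, "tax_rate_percent": 25}
net_pay = process_payroll(employee_record)
```

Read from the bottom up, the call hierarchy mirrors a structure chart: `process_payroll` sits at the top and each sub-function occupies the level beneath it.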

The Object-Oriented Approach to System Development

The traditional structured methodology focuses on what the new system is intended to do and then develops the procedures and data to do it. Object-oriented development de-emphasises system procedures and instead creates a model of a system composed of individual objects that combine data and procedures. The objects are independent of any specific system. These objects can then be placed into any system being built that needs to make use of the data and functions. In addition, in traditional structured methodologies all work is done serially, with work on each phase beginning only when the previous phase is completed. Object-oriented development theoretically allows simultaneous work on design and programming. These systems usually are easier to build and more flexible. Moreover, any objects created this way are reusable for other programs.
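The notion of an object that combines data and procedures, and that can be reused in more than one system, can be sketched in Python as follows. The `Customer` class and the two small functions that use it are hypothetical examples, not a recommended design.

```python
class Customer:
    """An object bundling data (attributes) with procedures (methods)."""

    def __init__(self, name, credit_limit):
        self.name = name
        self.credit_limit = credit_limit
        self.balance_owed = 0

    def can_purchase(self, amount):
        return self.balance_owed + amount <= self.credit_limit

    def record_purchase(self, amount):
        if not self.can_purchase(amount):
            raise ValueError("credit limit exceeded")
        self.balance_owed += amount


# Two different (hypothetical) systems reuse the same object unchanged.
def order_entry_accepts(customer, order_value):
    """Order entry system: may this order be accepted?"""
    return customer.can_purchase(order_value)


def credit_report_line(customer):
    """Credit control system: one line of a report."""
    return f"{customer.name}: {customer.balance_owed} owed of {customer.credit_limit}"
```

Because the credit rule lives inside the object, both systems apply it consistently, and a change to the rule is made in one place only.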

Computer Aide Software Engineering (CASE)

Computer-Aided Software Engineering is a development approach that provides tools to automate many of the tasks involved in software development. It includes software tools to assist in systems planning, analysis, designing, programming testing, operation and maintenance. In fact CASE tools can help automate the later stages of the SDLC – programming, testing and operation. For example if data flow diagrams were produced and stored within the CASE tools along with a data dictionary (data definitions) the CASE tools could be used to automatically create the program code.

CASE tools can provide a number of advantages in system development: they speed up the development process, help the analyst create a full set of requirements specifications, help produce systems that better match user requirements and ensure that system documentation is provided.

ALTERNATIVE APPROACHES TO DEVELOPING AND ACQUIRING SYSTEMS

A number of different system-building approaches have been developed. The organisation also has the option of outsourcing, developing or purchasing ready-made application software packages.

This section describes the following approaches:

- Traditional Systems Life Cycle

- Prototyping

- End-User Development

- Application Software Packages

- Outsourcing

Traditional Systems Life Cycle

The traditional systems life cycle (also referred to as the system development lifecycle (SDLC)) is a formal methodology for managing the development of systems and is still the primary methodology for medium and large projects. The overall development process is divided into distinct stages or phases. The stages are usually gone through sequentially with formal “sign-off” agreements among end users and the system specialists at the end of each stage. This ensures that each stage has been completed. The approach is slow, expensive, inflexible and may not be appropriate for many small systems.

The systems life cycle consists of systems analysis, systems design, programming, testing, conversion, and production and maintenance. These stages are outlined earlier in this chapter.

Advantages of the SDLC Approach

The advantages of using this method for building information systems are that it is highly structured and brings formality to requirements collection and system specification. It is suitable for building large, complex systems and for situations where tight control of the development process is required.

Disadvantages of the SDLC Approach

The disadvantages are that it is very costly and time-consuming, and that it is inflexible and discourages changes to the requirements during the later stages of the cycle. It is not suited to situations where requirements are difficult to define.

Prototyping

Information system prototyping is an interactive system design methodology that builds a model prototype of a system as a means of determining information requirements. Prototyping involves defining an initial set of user requirements and building a prototype system; then improving upon the system in a series of iterations based on feedback from the end users. An initial model of a system or important parts of the system is built rapidly for users to experiment with. The prototype is modified and refined until it conforms precisely to what users want. Information requirements and design are determined as users interact with and assess the prototype.

The steps in prototyping include identifying the user’s basic requirements, developing a working prototype of the system outlined in the basic requirements, using the prototype, and revising and enhancing the prototype based on the user’s feedback. Laudon and Laudon (2010) suggest a four-step prototyping process, where the steps are repeated many times if necessary. The steps in prototyping are summarised as follows:

Step 1: Identify the user’s basic requirements.

Step 2: Develop an initial prototype.

Step 3: Use the prototype.

Step 4: Revise and enhance the prototype.

The users check that the prototype meets their needs. If it does not meet their needs the prototype is revised. The third and fourth steps are repeated until users are satisfied with the prototype. The process of repeating the steps to build a system over and over again is referred to as an iterative process. Prototyping is best suited for smaller applications. Large systems with complex processing may only be able to have limited features prototyped such as screen inputs and outputs.
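
The iterative nature of the four steps can be sketched as a simple loop. This is a deliberately simplified illustration: the prototype is modelled as nothing more than a set of feature names, user feedback is supplied as a prepared list, and the function name and sample features are all hypothetical.

```python
def prototype_cycle(basic_requirements, feedback_rounds):
    """Simulate the prototyping steps, returning the final feature set
    and the number of revisions that were needed."""
    # Steps 1 and 2: identify basic requirements and build an initial prototype.
    prototype = set(basic_requirements)
    revisions = 0
    # Step 3: users try the prototype and report any changes they want.
    for requested_changes in feedback_rounds:
        if not requested_changes:
            break  # No changes requested, so the users are satisfied.
        # Step 4: revise and enhance the prototype, then repeat step 3.
        prototype |= set(requested_changes)
        revisions += 1
    return prototype, revisions


features, revisions = prototype_cycle(
    ["enter order"],
    [["search orders"], ["print invoice"], []],
)
print(sorted(features), revisions)
```

In this run the third and fourth steps are repeated twice before the users request no further changes.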

Benefits of Prototyping

- Prototyping is very useful for determining unclear requirements and where the design solution is unclear.

- Prototyping is especially helpful for designing end-user interfaces (screens and reports).

- End user involvement in the development process means that the systems are more likely to meet end user requirements.

- Prototyping can help reduce development costs by capturing requirements more accurately at an earlier stage in the development process.

Limitations of Prototyping

- Because prototypes can be built rapidly, documentation and testing may be minimal or not completed.

- It can result in poorly designed systems that are not scalable to handle large data volumes.

- Prototyping can result in a large number of iterations that can end up consuming the time that it was supposed to save.

- Problems can also arise when the prototype is adopted as the production version of the system.

End-User Development

End-user development refers to the development of information systems by end users without involvement of systems analysts or programmers. End users can utilise a number of user-friendly software tools to create basic but functional systems. Key tools used in end-user development include fourth-generation languages.

FOURTH-GENERATION LANGUAGES

Fourth-generation languages (4GLs) are sophisticated languages that enable end users to perform programming tasks with little or no professional programmer assistance. They can also be used by professional programmers. 4GLs are essentially shorthand programming languages that simplify the task of writing programs, typically reducing the amount of code required compared with what would be needed if a third-generation language were used. Laudon & Laudon (2010) identify a number of categories of fourth-generation language tools:

- Query languages: These are high-level languages used to retrieve data from databases and files. They can be used for ad hoc querying for information.

- Report generators: These enable the extraction of data from files or databases to create reports.

- Graphics languages: These are used to display data from files or databases in graphic format.

- Application generators: These are modules that can be used to generate programming code for input, processing, update and reporting once the users provide specifications for an application.

- Very high-level programming languages: These can be used to perform coding with far fewer instructions than conventional programming languages.

- General purpose software tools: These include software packages such as word processing, data management, graphics, desktop publishing and spreadsheet software that can be utilised by end user developers to build basic systems.
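
As an illustration of the first category, SQL is a widely used query language. The sketch below runs a single SQL query from Python using the standard sqlite3 module; the orders table and its contents are hypothetical sample data.

```python
import sqlite3

# Build a small in-memory database with hypothetical sample data.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
connection.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("Adams", 120.0), ("Byrne", 80.0), ("Adams", 30.0)],
)

# One query statement answers an ad hoc question: total ordered per customer.
totals = connection.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
connection.close()

print(totals)
```

The single SELECT statement replaces the loop, comparison and accumulation logic that would have to be written by hand in a third-generation language.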

Benefits of End-user Developments

The benefits of end user development include:

- Delivery of systems quickly and overcoming backlog in formal system development

- User requirements are better understood

- It is suited to developing low-transaction systems

- Lower cost development option.

Limitations of End-user Developments

The limitations of end user development include:

- Not suited to large complex systems

- Quality and testing standards are not always followed

- Poor documentation

- Can lead to data duplication and uncontrolled data

- System security is often poor or non-existent

- Systems may lack basic data backup and recovery capabilities

- These systems are not scalable and can suffer from poor performance as the number of users or transactions grows.

Application Software Packages

An application software package is a set of prewritten, pre-coded application software programs that are available for sale or lease. Packages range from very simple programs to very large and complex systems such as ERP systems. Packages are normally used when functions are common to many companies and when resources for in-house development are not available. Examples of application packages include payroll packages, accounting packages, inventory control applications and supply chain applications.

Advantages of Software Packages

Software packages provide several advantages:

- They are generally available for use straight away

- Programs are pre-tested and will generally have few errors, cutting down testing time and technical problems

- They are generally established, well-proven products that incorporate best practices

- The applications will have documentation and the vendor will normally provide training for the application

- The vendor often installs or assists in the installation of the package

- Periodic enhancement or updates are supplied by the vendor

- The vendors have support staff which reduces the need for individual organisations to have in-house expertise

- Packages are generally cheaper for the organisation than developing custom-built systems

Disadvantages of Software Packages

There are a number of disadvantages associated with software packages:

- There can be high conversion costs when moving from a custom legacy system to an off-the-shelf application package.

- They are not always an optimal solution and as a result packages may require widespread and expensive customisation to meet unique requirements

- Customisation is possible but can be costly, time-consuming and risky

Outsourcing

Outsourcing of information systems is the process of subcontracting the development, and sometimes the operation, of information systems to a third-party company that provides these services. The work is done by the vendor rather than the organisation’s internal information systems staff. Outsourcing is an option often considered when the cost of information systems technology has risen too high. Outsourcing is seen as a way to control costs or to develop applications when the firm lacks its own technology resources to do this work.

Benefits of Outsourcing

Organisations can realise the following benefits from outsourcing:

- Cost savings: Outsourcing can lower the overall cost of the service to the business. Organisations are increasingly outsourcing to low-cost economies through off-shore outsourcing.

- Improve quality: By contracting out the development to a specialist developer, the quality of the systems can be improved.

- Knowledge: Outsourcing provides access to wider experience and knowledge.

- Contract: Services will be provided to a legally binding contract with financial penalties and legal redress. This is not the case with internal services.

- Operational expertise: Access to operational best practice.

- Staffing issues: Outsourcing provides access to a larger talent pool in a cost-effective way, as capacity management becomes the responsibility of the supplier and the cost of any excess capacity is borne by them.

- Catalyst for change: An organisation can use an outsourcing agreement as a catalyst for change that it would not achieve on its own. It can bring new innovations that will drive change in organisations.

- Reduce time to market: Speeding up the development of the systems required to support new products and services can reduce the time to market for those products and services.

- Risk management: By working closely with the outsourcer on risk management many types of risks can be lessened.

- Time zone: In the case of off-shore outsourcing work can be done in different time zones thus speeding up the development process and keeping costs down.

Risks Associated with Outsourcing of Application Systems Development

There are a number of risks associated with outsourcing which must be understood and evaluated to establish if outsourcing is appropriate in the particular situation. The risks include:

- Many companies underestimate the costs associated with outsourcing.

- Outsourcing introduces new demands and costs such as RFI costs, travel expenses, negotiating contracts and project management.

- Issues can arise if the vendor doesn’t fully understand the business.

- Offshore outsourcing can introduce issues related to cultural differences.

- If requirements are not fully documented or understood, this can lead to substantial costs if changes are needed late in the development project.

- There is also the potential risk of the vendor going out of business at some stage after the application is in operation, leaving the application unsupported.

Factors that Influence which Development Approach to Adopt

The approach that is adopted to develop the system will depend on certain factors. Some examples of these factors are:

Knowledge and experience of the developers

If the analysts and designers have a good knowledge of the business sector in which the system is being implemented they will be in a better position to adopt the traditional (SDLC) approach. The input of users in this case is less critical than in a situation where the analysts and designers are unfamiliar with the business.

Nature of system being developed

Large and complex systems may require the iterative approach that prototyping uses to gradually extract and refine requirements. However, large and complex systems also require tight control of the development process, which would favour the use of the SDLC. Prototyping may be suitable for parts of the system such as the user interface.

Clarity of the system requirements

If requirements are clear, well defined and understood then there is less scope for misunderstanding. Therefore the traditional approach may be suitable. If requirements are vague and unclear then the prototyping approach is more suitable as it ensures that the system requirements are clearly understood and the system is developed to meet these requirements.

Experience of the user community

If users are experienced with computer systems they may be able to identify and express requirements more clearly, thus allowing the traditional approach or end-user development approaches to be used. If users are inexperienced with computer systems, it is sensible to show users a version of the system as early as possible in the development process to aid the understanding of requirements. Prototyping is therefore suitable for inexperienced users.

Timescale involved

Prototyping can be used in development projects with short timescales. If managed correctly, prototyping can help to ensure speedy system development. If the timescale is short, prototyping may be more suitable than the traditional approach. However, if not tightly managed, prototyping can also lead to overruns as it can get bogged down in cycles of review and changes. If the user is concerned about overruns then the traditional approach may be better.

CONTEMPORARY APPROACHES TO APPLICATION DEVELOPMENT

In the digital environment where the digital firm operates, organisations need to be able to change their technology capability very quickly to respond to new threats and opportunities. Companies are using shorter, more informal development processes for many of their e-commerce and e-business applications. A number of techniques can be used to speed up the development process. These include joint application development (JAD), prototyping techniques such as rapid application development (RAD) and reusable standardised software components.

Joint Application Development (JAD)

This is an alternative approach to identifying and specifying requirements that was developed in the late 1970s. The JAD approach is a collaborative method that involves bringing together key users, managers and systems analysts for group sessions. Requirements are collected from a number of key people at the same time, allowing the analyst to see areas where there is agreement around requirements and areas of difference. JAD sessions are usually conducted away from where people normally work, in specially designed conference rooms that are suitably equipped. The typical participants include:

- A JAD Session Leader

- Operational Level Users

- Managerial Level Users

- Systems Analysts

- Secretary – takes notes and records decisions

- Sponsor – A JAD sponsor would typically be a senior manager to highlight its importance

When properly managed and planned, JAD sessions can speed up the analysis and design phases of a system development project.

Rapid Application Development (RAD)

Rapid Application Development (RAD) is an iterative approach to application development similar to prototyping. Requirements capture, analysis, design and the building of the system itself proceed through a sequence of refinements. The RAD approach was developed initially by James Martin in 1991.

The developers enhance and extend the initial version through multiple iterations until it is suitable for operational use. Unlike prototyping, RAD produces functional components of a final system rather than a limited scale version.

One of the big advantages of RAD is that it reduces the time it takes to build systems. Like other methods, RAD does have disadvantages. The method’s accelerated approach to systems analysis and design may result in systems that have limited functionality and flexibility for change and that often suffer from quality issues.

Component-based Development and Web Services

Component-based development is the practice of developing reusable components that are commonly found in many software programs. For example, the graphical user interface can be created just once and then used in several applications or several parts of the same application. This approach saves development time and also creates functions that users have to learn only once and use multiple times.
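
A minimal sketch of a reusable component is given below. The Paginator class and the two applications that use it are hypothetical; the point is only that the component is written once and then used, unchanged, in more than one place.

```python
class Paginator:
    """Reusable component: splits any list of items into fixed-size pages."""

    def __init__(self, page_size):
        self.page_size = page_size

    def page(self, items, number):
        """Return the items on the given page (pages are numbered from 1)."""
        start = (number - 1) * self.page_size
        return items[start:start + self.page_size]


paginator = Paginator(page_size=2)


# Two hypothetical applications share the same component.
def customer_screen(customers, page_number):
    return paginator.page(customers, page_number)


def order_report(orders, page_number):
    return paginator.page(orders, page_number)


print(customer_screen(["Adams", "Byrne", "Clarke"], 2))
print(order_report([101, 102, 103, 104], 1))
```

Because both applications page through their data in exactly the same way, users only have to learn the behaviour once.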

Web services are reusable software components that enable one application to communicate with another (share data and services) without the need for custom program code. In addition to supporting the integration of systems, Web services can be used to build new information system applications. Web services create software components that are deliverable over the Internet and can be used to link an organisation’s systems to those of another organisation.
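
The sketch below gives a rough feel for one application calling a service offered by another over a network. It is a simplification: it exchanges JSON over plain HTTP using only the Python standard library, rather than the XML-based standards usually associated with Web services, and the stock-checking service, its data and all names are hypothetical. Both sides run in one program here purely so that the example is self-contained.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer

STOCK = {"widget": 42}  # Hypothetical data held by the supplier's system.


class StockHandler(BaseHTTPRequestHandler):
    """Supplier side: answers stock-level requests in a standard format."""

    def do_GET(self):
        item = self.path.strip("/")
        reply = {"item": item, "in_stock": STOCK.get(item, 0)}
        body = json.dumps(reply).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # Keep the example output free of request logging.


def check_stock(host, port, item):
    """Customer side: needs only the service address and message format."""
    connection = HTTPConnection(host, port, timeout=5)
    connection.request("GET", f"/{item}")
    reply = json.loads(connection.getresponse().read())
    connection.close()
    return reply


# Port 0 asks the operating system for any free port on this machine.
server = HTTPServer(("127.0.0.1", 0), StockHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
result = check_stock("127.0.0.1", server.server_port, "widget")
server.shutdown()
server.server_close()
print(result)
```

The calling application does not need to know what language or platform the supplier's system was built on, only where the service is and what messages it accepts.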

MANAGEMENT CHALLENGES

Challenges

Businesses today are required to build applications very quickly if they are to remain competitive. This is particularly true in relation to e-commerce and e-business applications. The new systems are more likely to be integrated with systems belonging to suppliers, customers and business partners.

Possible Solutions

Companies are turning to rapid application development (RAD), joint application development (JAD), and reusable software components to improve the systems development process. RAD uses object-oriented software, prototyping, and fourth-generation tools for quick creation of systems. Component-based development speeds up application development by providing software components that can be combined (and reused) to create large business applications.

Web services delivered over the Internet can be utilised for building new systems or integrating existing systems. Web services enable organisations to link their systems together independent of the technology platform the individual systems were created on.