1. The Questionnaire

A questionnaire can be defined as a set of printed questions deliberately designed and structured to gather information from respondents.

Advantages of Using Questionnaires

1. They can be used to reach many people.

2. They save time, especially when they are mailed to respondents.

3. They are cost-effective, since they can be mailed and no interviewers are needed.

4. Questions are standardized, so all respondents answer the same questions in the same form and their responses can be compared.

5. Interviewer biases can be avoided when questionnaires are mailed.

6. They give a greater feeling of anonymity and therefore encourage open responses to sensitive questions.

7. They are effective in reaching distant locations that would be impractical to visit.

Disadvantages

1. Questionnaires mailed to respondents may not be returned.

2. The researcher cannot control the context in which questions are answered, in particular the presence of other people who may fill in the questionnaire.

3. Some potential respondents, particularly the least educated, may be unable to respond to written questionnaires because of illiteracy or other reading difficulties.

4. Written questionnaires do not allow the researcher to correct misunderstandings or to answer questions the respondent may have.

5. Some questionnaires may be returned half-filled or unanswered.

Guidelines for Asking Questions

In the actual practice of social research, variables are usually operationalized by asking people questions as a way of getting data for analysis and interpretation. That is always the case in survey research, and such ‘self-report’ data are often collected in experiments, field research, and other modes of observation. Sometimes the questions are asked by an interviewer; sometimes they are written down and given to respondents to complete (these are called self-administered questionnaires).

The term questionnaire suggests a collection of questions, but an examination of a typical questionnaire will probably reveal as many statements as questions. That is not without reason. Often, the researcher is interested in determining the extent to which respondents hold a particular attitude or perspective. If you are able to summarize the attitude in a fairly brief statement, you will often present that statement and ask respondents whether they agree or disagree with it. This is the basis of the Likert scale, developed by Rensis Likert: a format in which respondents are asked to strongly agree, agree, disagree, or strongly disagree, or perhaps strongly approve, approve, and so forth.
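Before analysis, Likert-type answers are usually converted to numbers. The sketch below assumes one common convention (4 = strongly agree down to 1 = strongly disagree), which the text itself does not prescribe, and uses hypothetical answers. Because the codes are ordinal, it summarizes them with the median rather than the mean.

```python
from statistics import median

# Assumed coding convention for the four-point agree/disagree format.
LIKERT_CODES = {
    "strongly agree": 4,
    "agree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def code_responses(responses):
    """Convert verbal Likert answers to numeric codes, ignoring case and spacing."""
    codes = []
    for answer in responses:
        key = answer.strip().lower()
        if key not in LIKERT_CODES:
            raise ValueError(f"unexpected response: {answer!r}")
        codes.append(LIKERT_CODES[key])
    return codes


# Hypothetical answers from five respondents to a single statement.
answers = ["Agree", "strongly agree", "Disagree", "agree", "Strongly Disagree"]
codes = code_responses(answers)
print(codes)          # [3, 4, 2, 3, 1]
print(median(codes))  # 3
```

An unexpected answer raises an error instead of being silently coded, which mirrors the need to check returned questionnaires before processing.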

Open-Ended and Closed-Ended Questions

Open-ended questions

The respondent is asked to provide his or her own answer to the question (e.g., ‘What do you feel is the most important issue facing Kenya today?’) and is provided with a space to write in the answer (or is asked to report it verbally to an interviewer).

Closed-ended Questions

The respondents are asked to select an answer from among a list provided by the researcher. Closed-ended questions are very popular because they provide greater uniformity of responses and are more easily processed. Open-ended responses must be coded before they can be processed for computer analysis. This coding process often requires that the researcher interpret the meaning of responses, opening the possibility of misunderstanding and researcher bias. There is also a danger that some respondents will give answers that are essentially irrelevant to the researcher’s intent. Closed-ended responses, by contrast, can often be transferred directly into computer format.

The chief shortcoming of closed-ended questions lies in the researcher’s structuring of responses. In asking about ‘the most important issue facing Kenya’, for example, your checklist of issues might omit certain issues that respondents would have said were important.

In the construction of closed-ended questions, the response categories provided should be exhaustive: they should include all the possible responses that might be expected, often by adding a category such as ‘Other (please specify …………)’. Second, the answer categories must be mutually exclusive: no response should fit into more than one category, so the respondent should not feel compelled to select more than one.

(In some cases you may wish to solicit multiple answers, but these may create difficulties in data processing and analysis later on.)
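For numeric answer categories such as age brackets, both requirements can be checked mechanically. The sketch below assumes whole-number ages and hypothetical brackets written as half-open ranges (the lower bound is included, the upper bound is not). It reports ages that fit no category (the set is not exhaustive) and ages that fit more than one (the set is not mutually exclusive).

```python
# Hypothetical age brackets as (label, low, high), covering low <= age < high.
AGE_BRACKETS = [
    ("18-19", 18, 20),
    ("20-29", 20, 30),
    ("30-39", 30, 40),
    ("40 and over", 40, 200),  # 200 is an arbitrary ceiling for the top bracket
]


def brackets_for(age, brackets):
    """Return the labels of every bracket that contains `age`."""
    return [label for label, low, high in brackets if low <= age < high]


def check_categories(brackets, min_age, max_age):
    """List ages that fit no bracket (gaps) and ages that fit several (overlaps)."""
    gaps, overlaps = [], []
    for age in range(min_age, max_age + 1):
        matches = brackets_for(age, brackets)
        if not matches:
            gaps.append(age)
        elif len(matches) > 1:
            overlaps.append(age)
    return gaps, overlaps


print(check_categories(AGE_BRACKETS, 18, 99))  # ([], [])

# A flawed, hypothetical set: "20" fits both brackets, and 31-35 fit neither.
flawed = [("18-20", 18, 21), ("20-30", 20, 31)]
print(check_categories(flawed, 18, 35))  # ([31, 32, 33, 34, 35], [20])
```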

Make Items Clear

Questionnaire items should be clear and unambiguous. Often you can become so deeply involved in the topic under examination that opinions and perspectives are clear to you but will not be clear to your respondents, many of whom have given little or no attention to the topic. Or, if you have only a superficial understanding of the topic, you may fail to specify the intent of your question sufficiently. The question ‘What do you think about the proposed nuclear freeze?’ may evoke in the respondent a counter-question: ‘Which nuclear freeze proposal?’ Questionnaire items should be precise so that the respondent knows exactly what question the researcher wants answered.

Avoid Double-Barreled Questions

Frequently, researchers ask respondents for a single answer to a combination of questions. That seems to happen most often when the researcher has personally identified with a complex question. For example, you might ask respondents to agree or disagree with the statement ‘The United States should abandon its space program and spend the money on domestic programs.’ Although many people would unequivocally agree with the statement and others would unequivocally disagree, still others would be unable to answer. Some would want to abandon the space program and give the money back to the taxpayers. Others would want to continue the program but also put more money into domestic programs. These latter respondents could neither agree nor disagree without misleading you.

Respondents Must Be Competent to Answer

In asking respondents to provide information, you should continually ask yourself whether they are able to do so reliably. In a study of child rearing, you might ask respondents to report the age at which they first talked back to their parents. Quite aside from the problem of defining ‘talking back’ to parents, it is doubtful whether most respondents would remember with any degree of accuracy.

One group of researchers examining the driving experience of teenagers insisted on asking an open-ended question concerning the number of miles driven since receiving a license. Although consultants argued that few drivers would be able to estimate such information with any accuracy, the question was asked nonetheless. In response, some teenagers reported driving hundreds of thousands of miles.

Questions Should Be Relevant

When attitudes are requested on a topic that few respondents have thought about or really cared about, the results are not likely to be very useful. This point is occasionally illustrated when researchers ask for responses relating to fictitious persons and issues. In a political poll, respondents were asked whether they were familiar with each of 15 political figures in the community. As a methodological exercise, one name was made up: John Maina. In response, 9% of the respondents said they were familiar with him. Of those respondents familiar with him, about half reported seeing him on television and reading about him in the newspapers.

When you obtain responses to fictitious issues, you can disregard those responses. But when the issue is real, you may have no way of telling which responses genuinely reflect attitudes and which reflect meaningless answers to irrelevant questions.

Short Items Are Best

In the interest of being unambiguous and precise and of pointing to the relevance of an issue, the researcher is often led into long and complicated items. That should be avoided. Respondents are often unwilling to study an item in order to understand it. The respondent should be able to read an item quickly, understand its intent, and select or provide an answer without difficulty. Provide clear, short items that will not be misinterpreted under those conditions.

Avoid Negative Items

The appearance of a negative item in a questionnaire paves the way for easy misinterpretation. Asked to agree or disagree with the statement ‘The United States should not recognize Cuba’, a sizeable portion of the respondents will read over the word not and answer on that basis. Thus, some will agree with the statement when they are in favor of recognition, and others will agree when they oppose it. And you may never know which is which.

Avoid Biased Items and Terms

The meaning of someone’s response to a question depends in large part on the wording of the question that was asked. That is true of every question and answer. Some questions seem to encourage particular responses more than other questions do. Questions that encourage respondents to answer in a particular way are called biased. Most researchers recognize the likely effect of a question that begins ‘Don’t you agree with the President that…’, and no reputable researcher would use such an item. Unhappily, the biasing effect of items and terms is far subtler than this example suggests.

The mere identification of an attitude or position with a prestigious person or agency can bias responses. The item ‘Do you agree or disagree with the recent Supreme Court decision that…’ would have a similar effect. This does not mean that such wording will necessarily produce consensus or even a majority in support of the position identified with the prestigious person or agency, only that support would likely be increased over what would have been obtained without such identification.

Questionnaire items can be biased negatively as well as positively. ‘Do you agree or disagree with the position of Adolf Hitler when he stated that…’ is an example. Since 1949, asking Americans questions about China has been tricky. Identifying the country as ‘China’ can still result in confusion between mainland China and Taiwan. Not all Americans recognize the official name, the People’s Republic of China. Referring to ‘Red China’ or ‘Communist China’ evokes negative responses from many respondents, though that might be desirable if your purpose were to study anti-communist feelings.

Questionnaire Construction

Questionnaires are essential to, and most directly associated with, survey research. They are also widely used in experiments, field research, and other data-collection activities. Thus questionnaires are used in connection with many modes of observation in social research.

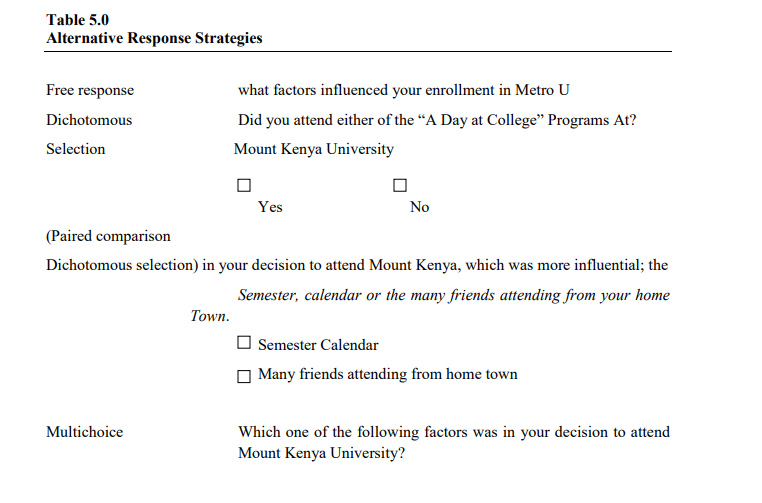

Response Strategies Illustrated

The characteristics of respondents, the nature of the topic(s) being studied, the type of data needed, and your analysis plan dictate the response strategy. Examples of the strategies described below are found in Table 10.1.

Free-Response Questions: Also known as open-ended questions, these ask the respondent a question, and either the interviewer pauses for the answer (which is unaided) or the respondent records his or her ideas in his or her own words in the space provided on the questionnaire.

Dichotomous Response Questions: A topic may present clearly dichotomous choices: something is a fact or it is not; a respondent can either recall or not recall information; a respondent attended or didn’t attend an event.

Multiple-Choice Questions: These are appropriate where there are more than two alternatives or where we seek gradations of preference, interest, or agreement; the latter situation also calls for rating questions. While such questions offer more than one alternative answer, they request the respondent to make a single choice. Multiple-choice questions can be efficient, but they also present unique design problems.

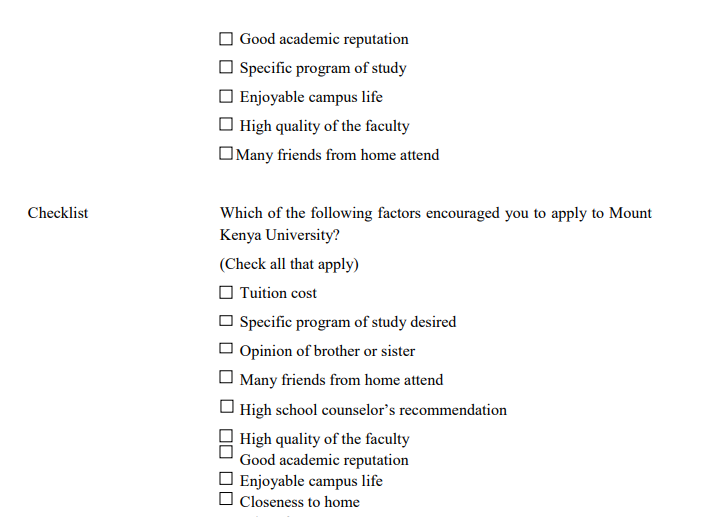

Checklist Strategies

When you want a respondent to give multiple responses to a single question, you will ask the question in one of three ways. If relative order is not important, the checklist is the logical choice. Questions such as ‘Which of the following factors encouraged you to apply to Mount Kenya University? (Check all that apply.)’ force the respondent to exercise a dichotomous response (yes, encouraged; no, didn’t encourage) to each factor presented. Of course, you could have asked for the same information as a series of dichotomous selection questions, one for each individual factor, but that would have been time-consuming. Checklists are more efficient. Checklists generate nominal data.
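Because every factor on a checklist receives its own yes/no answer, one respondent’s ticks can be stored as a set of dichotomous variables, and a frequency count is the natural summary of such nominal data. The sketch below is a minimal illustration; the factor names and respondents are hypothetical.

```python
# Hypothetical factor list for the "check all that apply" question.
FACTORS = ["location", "tuition fees", "programmes offered", "recommendation"]


def checklist_to_dichotomous(checked, factors=FACTORS):
    """Turn one respondent's checked items into a 1 (yes) / 0 (no) value per factor."""
    unknown = set(checked) - set(factors)
    if unknown:
        raise ValueError(f"items not on the checklist: {sorted(unknown)}")
    return {factor: int(factor in checked) for factor in factors}


# Two hypothetical respondents.
respondents = [
    ["location", "recommendation"],
    ["tuition fees"],
]
rows = [checklist_to_dichotomous(r) for r in respondents]

# Frequency of each factor across respondents (nominal data are counted, not averaged).
counts = {f: sum(row[f] for row in rows) for f in FACTORS}
print(counts)
# {'location': 1, 'tuition fees': 1, 'programmes offered': 0, 'recommendation': 1}
```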

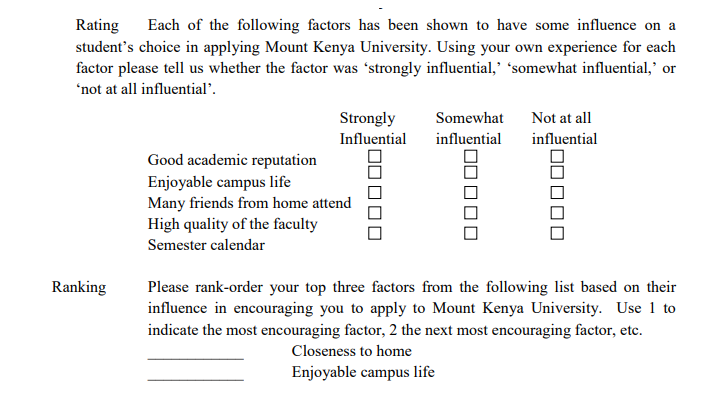

Rating Strategies

Rating questions ask the respondent to position each factor on a companion scale, whether verbal, numeric, or graphic. For example: ‘Each of the following factors has been shown to have some influence on a student’s choice to apply to Mount Kenya University. Using your own experience, for each factor please tell us whether the factor was “strongly influential”, “somewhat influential”, or “not at all influential”.’ Generally, rating-scale structures generate ordinal data; some carefully crafted scales generate interval data.

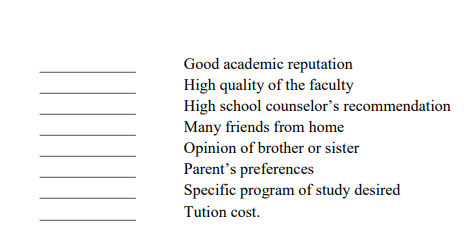

Ranking Strategies

When the relative order of the alternatives is important, the ranking question is ideal: ‘Please rank order your top three factors from the following list based on their influence in encouraging you to apply to Mount Kenya University. Use 1 to indicate the most encouraging factor, 2 the next most encouraging factor, and so on.’ The checklist strategy would provide the three factors of influence, but we would have no way of knowing the importance the respondent places on each factor. Even in a personal interview, the order in which the factors are mentioned is not a guarantee of influence. Ranking as a response strategy solves this problem.
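Ranked answers can be checked for completeness and then combined across respondents. The sketch below assumes a hypothetical factor list and uses a Borda-style points scheme (3 points for rank 1, 2 for rank 2, 1 for rank 3). This is one possible way to aggregate rankings, not a method taken from the text.

```python
from collections import Counter

# Hypothetical factors for the "rank your top three" question.
FACTORS = {"location", "tuition fees", "programmes offered", "recommendation"}


def validate_top_three(ranking):
    """Check that ranks 1, 2 and 3 are each used once, and only on listed factors."""
    if sorted(ranking.values()) != [1, 2, 3]:
        raise ValueError("ranks must be 1, 2 and 3, each used exactly once")
    if not set(ranking) <= FACTORS:
        raise ValueError("ranking contains a factor not on the list")
    return ranking


def points(rankings):
    """Tally Borda-style points: rank 1 -> 3 points, rank 2 -> 2, rank 3 -> 1."""
    totals = Counter()
    for ranking in rankings:
        for factor, rank in validate_top_three(ranking).items():
            totals[factor] += 4 - rank
    return totals


# Two hypothetical respondents' rankings.
rankings = [
    {"location": 1, "tuition fees": 2, "recommendation": 3},
    {"tuition fees": 1, "programmes offered": 2, "location": 3},
]
totals = points(rankings)
print(totals.most_common())
# [('tuition fees', 5), ('location', 4), ('programmes offered', 2), ('recommendation', 1)]
```

Unlike the checklist counts, these totals preserve the information that one factor was placed above another, which is the point of using a ranking question.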

Instructions

Instructions to the interviewer or respondent attempt to ensure that all respondents are treated equally, thus avoiding building error into the results. Two principles form the foundation for good instructions: clarity and courtesy. Instruction language needs to be unfailingly simple and polite.

Instruction topics include (1) how to terminate an interview when the respondent does not correctly answer the screen or filter questions, (2) how to conclude an interview when the respondent decides to discontinue, (3) skip directions for moving between topic sections of an instrument when movement depends on the answer to specific questions or when branched questions are used, and (4) telling the respondent to a self-administered instrument what to do with the completed questionnaire. In a self-administered questionnaire, instructions must be contained within the survey instrument. Personal interviewer instructions are sometimes in a document separate from the questionnaire (a document thoroughly discussed during interviewer training) or are distinctly and clearly marked (highlighted, printed in colored ink, or boxed on the computer screen) on the data collection instrument itself.
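Skip directions behave like a small routing table: the answer to one question determines which question comes next. The sketch below shows how such branching might be represented, for instance in a computer-assisted instrument. The question IDs, wording, and the single skip rule (respondents who answer ‘no’ to Q1 go straight to Q4) are all hypothetical.

```python
# Hypothetical instrument: question IDs, wording, and the skip rule are invented.
QUESTIONS = {
    "Q1": "Have you applied to any university in the last year? (yes/no)",
    "Q2": "Which university did you apply to?",
    "Q3": "How did you hear about that university?",
    "Q4": "What is your current occupation?",
}
ORDER = list(QUESTIONS)  # dicts keep insertion order, so this is Q1..Q4

# Skip directions: (question ID, answer) -> question ID to jump to.
SKIPS = {("Q1", "no"): "Q4"}


def next_question(current, answer):
    """Return the next question ID, applying a skip direction if one matches."""
    target = SKIPS.get((current, answer.strip().lower()))
    if target is not None:
        return target
    position = ORDER.index(current)
    return ORDER[position + 1] if position + 1 < len(ORDER) else None


def route(answers):
    """Follow the instrument for one respondent and list the questions asked."""
    asked, current = [], ORDER[0]
    while current is not None:
        asked.append(current)
        current = next_question(current, answers.get(current, ""))
    return asked


print(route({"Q1": "No"}))   # ['Q1', 'Q4']
print(route({"Q1": "yes"}))  # ['Q1', 'Q2', 'Q3', 'Q4']
```

Keeping the skip rules in one table, rather than scattering them through the question text, makes it easier to check during interviewer training that every branch leads somewhere sensible.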

Conclusion

The role of the conclusion is to leave the respondent with the impression that his or her participation has been valuable. Subsequent researchers may need this individual to participate in new studies. If every interviewer or instrument expresses appreciation for participation, cooperation in subsequent studies is more likely.