UNIVERSITY EXAMINATIONS: 2016/2017

EXAMINATION FOR THE DEGREE OF MASTER OF SCIENCE IN

DATA ANALYTICS

MDA 5203 MACHINE LEARNING

PART TIME/WEEKEND

DATE: DECEMBER 2016    TIME: 2 HOURS

INSTRUCTIONS: Answer Question One & ANY OTHER TWO questions.

QUESTION ONE: [20 MARKS]

a) Explain the following concepts as used in neural networks:

i. Supervised learning

ii. Unsupervised learning

iii. Reinforcement learning

(3 Marks)

b) Evolutionary computation methods are based on the Darwinian concept of “survival of the fittest”. Using a suitable illustrative diagram and an example, discuss a typical evolutionary algorithm.

(7 Marks)
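For illustration, here is a minimal Python sketch of one typical evolutionary algorithm, a genetic algorithm. It assumes a hypothetical toy objective ("OneMax": maximise the number of 1-bits in a bit string), tournament selection, single-point crossover, bit-flip mutation and elitism. These are common choices, not the only ones. The function names and parameters are illustrative.

```python
import random

def fitness(bits):
    # Hypothetical toy objective (OneMax): count of 1-bits
    return sum(bits)

def tournament(pop, k, rng):
    # Selection: the fittest of k randomly drawn individuals survives
    return max(rng.sample(pop, k), key=fitness)

def crossover(a, b, rng):
    # Single-point crossover: head of one parent, tail of the other
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:]

def mutate(bits, rate, rng):
    # Flip each bit independently with probability `rate`
    return [1 - g if rng.random() < rate else g for g in bits]

def evolve(n_bits=20, pop_size=30, generations=100, rate=0.02, seed=0):
    rng = random.Random(seed)
    # Initialise a random population
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        best = max(pop, key=fitness)
        if fitness(best) == n_bits:  # termination: optimum reached
            break
        children = [best]  # elitism: keep the best individual unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, 3, rng), tournament(pop, 3, rng)
            children.append(mutate(crossover(p1, p2, rng), rate, rng))
        pop = children  # the new generation replaces the old one
    return max(pop, key=fitness)
```

The loop mirrors the usual cycle: initialise, evaluate fitness, select, recombine, mutate, replace, until a termination condition holds. Because of elitism, the best fitness can never decrease from one generation to the next.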

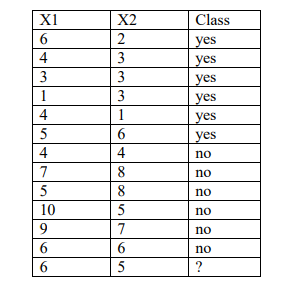

c) By first outlining the procedure for KNN, decide the class of the new instance given in the table below. Use K = 3.
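The KNN procedure can be sketched in Python as follows. The question does not state a distance metric, so Euclidean distance is an assumption here. Ties in the vote go to whichever label `Counter.most_common` lists first. The training data in any usage is hypothetical, not the table from the question.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    # train: list of (feature_vector, label) pairs
    # 1. Distance from the query to every training instance (Euclidean, assumed)
    dists = [(math.dist(x, query), label) for x, label in train]
    # 2. Keep the k nearest neighbours
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    # 3. Majority vote among those k neighbours
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

For example, with hypothetical points `((1, 1), "yes")`, `((1, 2), "yes")`, `((2, 1), "yes")` and three "no" points near `(6, 6)`, the query `(2, 2)` is classified as "yes".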

Explain the concept of K-Means and then determine the resulting clusters from the dummy data given below. Use K = 2, let A and B be the initial centroids, and use Euclidean distance as the metric.
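A minimal Python sketch of K-Means with user-chosen initial centroids, which correspond to points A and B in the question. It alternates an assignment step and an update step until the centroids stop moving. The name `kmeans` and the sample points in any usage are hypothetical. Keeping an empty cluster's centroid in place is one design choice among several.

```python
import math

def kmeans(points, init_centroids, max_iter=100):
    centroids = [tuple(c) for c in init_centroids]
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean)
        clusters = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Update step: move each centroid to the mean of its members
        new = []
        for c, members in zip(centroids, clusters):
            if members:
                new.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new.append(c)  # empty cluster: keep the old centroid
        if new == centroids:  # converged: no centroid moved
            break
        centroids = new
    return centroids, clusters
```

With hypothetical points `(1, 1), (2, 1), (1, 2), (8, 8), (9, 8), (8, 9)` and the first two as initial centroids, the algorithm settles on two clusters of three points each, centred at about (1.33, 1.33) and (8.33, 8.33).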

QUESTION TWO: [15 MARKS]

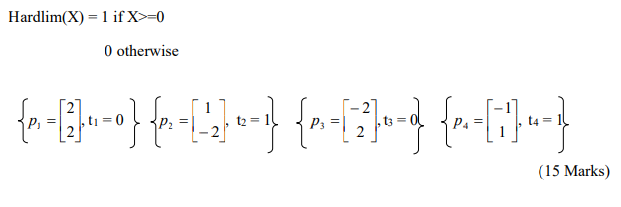

Consider a two-class decision problem represented by the input-target pairs below. Train a perceptron network to solve this problem using the perceptron learning rule.

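Let the initial weight and bias be W = [0, 0] and b = 0. Use the hardlim function as your activation function, defined as:

$$
a = \mathrm{hardlim}(n) = \begin{cases} 1, & n \ge 0 \\ 0, & n < 0 \end{cases}
$$

where $n = Wp + b$ is the net input for input vector $p$.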
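The perceptron learning rule can be sketched in Python as below. For each pair it computes the error e = t - a and applies W_new = W_old + e p and b_new = b_old + e. Training stops after a full pass with no errors. The function names and the epoch cap are assumptions. The AND-gate data in the usage note is hypothetical and is not the data from the question.

```python
def hardlim(n):
    # Hard-limit transfer function: 1 if n >= 0, else 0
    return 1 if n >= 0 else 0

def train_perceptron(samples, w=(0.0, 0.0), b=0.0, max_epochs=100):
    # samples: list of (input_vector p, target t) pairs with t in {0, 1}
    w = list(w)
    for _ in range(max_epochs):
        errors = 0
        for p, t in samples:
            a = hardlim(sum(wi * pi for wi, pi in zip(w, p)) + b)
            e = t - a  # error for this pair
            if e != 0:
                errors += 1
                # Perceptron rule: W <- W + e*p, b <- b + e
                w = [wi + e * pi for wi, pi in zip(w, p)]
                b += e
        if errors == 0:  # a full pass with no errors: converged
            break
    return w, b
```

Starting from W = [0, 0] and b = 0 on the hypothetical AND-gate pairs `((0,0),0), ((0,1),0), ((1,0),0), ((1,1),1)`, this sketch converges to W = [2, 1], b = -3, which classifies all four pairs correctly.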

QUESTION THREE: [15 MARKS]

a) Decision trees are one of the main methods that use induction as a learning algorithm. Explain the main concept behind induction.

(3 Marks)

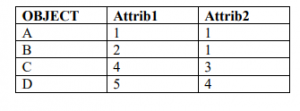

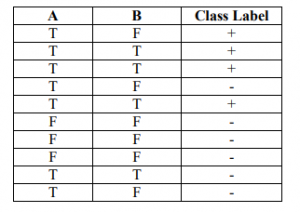

b) Consider the following data set for a binary class problem.

i. Calculate the information gain (based on entropy) when splitting on A and on B. Which attribute would the decision tree induction algorithm choose?

(6 Marks)
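A minimal Python sketch of entropy-based information gain, Gain = Entropy(parent) - Σ (|child| / |parent|) Entropy(child), with Entropy = -Σ p log2 p. It assumes each row is a dict with attribute columns such as "A" and "B" and a class column named "Class". That column name and the rows in the test are hypothetical, not the question's table.

```python
import math
from collections import Counter

def entropy(labels):
    # -sum p * log2(p) over the class proportions
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(rows, attr, target="Class"):
    n = len(rows)
    parent = entropy([r[target] for r in rows])
    # Weighted entropy of the child nodes after splitting on `attr`
    children = 0.0
    for v in set(r[attr] for r in rows):
        subset = [r[target] for r in rows if r[attr] == v]
        children += len(subset) / n * entropy(subset)
    return parent - children
```

The induction algorithm picks the attribute with the larger gain, for example `max(["A", "B"], key=lambda a: info_gain(rows, a))`.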

ii. Calculate the gain in the Gini index when splitting on A and on B. Which attribute would the decision tree induction algorithm choose?

(6 Marks)
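The Gini version follows the same pattern, with Gini = 1 - Σ p² as the impurity measure. As before, this is a sketch that assumes dict rows with a hypothetical "Class" column. The test data is made up for illustration.

```python
from collections import Counter

def gini(labels):
    # 1 - sum p^2 over the class proportions
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def gini_gain(rows, attr, target="Class"):
    n = len(rows)
    parent = gini([r[target] for r in rows])
    # Weighted Gini index of the child nodes after splitting on `attr`
    children = 0.0
    for v in set(r[attr] for r in rows):
        subset = [r[target] for r in rows if r[attr] == v]
        children += len(subset) / n * gini(subset)
    return parent - children
```

Entropy and Gini often rank attributes the same way, but not always, which is why the question asks for both.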

QUESTION FOUR: [15 MARKS]

Using Naive Bayes, determine the class of a new instance X = {A = T, B = F} based on the table given in Question 3(b).

(15 Marks)
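A minimal Python sketch of categorical Naive Bayes. Each class gets the score P(c) × Π P(attr = value | c), with every probability estimated by relative frequency, and the class with the highest score wins. No Laplace smoothing is applied, which is an assumption: a single zero count sets that class's score to 0. The row format, the "Class" column name and the test data are hypothetical.

```python
def naive_bayes(rows, query, target="Class"):
    # rows: list of dicts; query: dict of attribute -> value, e.g. {"A": "T", "B": "F"}
    n = len(rows)
    classes = sorted(set(r[target] for r in rows))
    scores = {}
    for c in classes:
        nc = sum(1 for r in rows if r[target] == c)
        score = nc / n  # prior P(c)
        for attr, value in query.items():
            # Likelihood P(attr = value | c) by relative frequency (no smoothing)
            match = sum(1 for r in rows if r[target] == c and r[attr] == value)
            score *= match / nc
        scores[c] = score
    # Predict the class with the largest unnormalised posterior
    return max(scores, key=scores.get), scores
```

The scores are unnormalised posteriors. Dividing each by their sum gives P(c | X) when the sum is non-zero.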