1a) Define simulation

Simulation is the process of experimenting with or using a model and noting the results that occur. In a business context, experimenting with a model usually consists of inserting different input values and observing the resulting output values. For example, in a simulation of a queuing situation the input values might be the number of arrivals and/or service points, and the output values might be the number of customers in the queue and/or the time they spend in it.
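The queuing example can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: a single service point, exponentially distributed inter-arrival and service times, and the hypothetical function name `simulate_queue`; none of these details come from the notes themselves.

```python
import random

def simulate_queue(arrival_rate, service_rate, n_customers, seed=0):
    """Simulate a single-server, first-come-first-served queue.

    Inputs: arrival rate, service rate (customers per unit time).
    Outputs: (mean waiting time, longest waiting time) in the queue.
    """
    rng = random.Random(seed)      # seeded so the run can be repeated
    arrival_time = 0.0
    server_free_at = 0.0
    waits = []
    for _ in range(n_customers):
        arrival_time += rng.expovariate(arrival_rate)
        start = max(arrival_time, server_free_at)   # wait if the server is busy
        waits.append(start - arrival_time)
        server_free_at = start + rng.expovariate(service_rate)
    return sum(waits) / len(waits), max(waits)

if __name__ == "__main__":
    # Vary one input (service rate) and observe the output (waiting time).
    for service_rate in (1.2, 1.5, 2.0):
        mean_wait, max_wait = simulate_queue(1.0, service_rate, 10_000)
        print(f"service rate {service_rate}: mean wait {mean_wait:.2f}, max wait {max_wait:.2f}")
```

Changing `service_rate` while keeping everything else fixed is exactly the "insert different inputs, observe the outputs" experiment described above.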

Advantages of simulation

- It can be applied in areas where analytical techniques are not available or would be too complex.

- Constructing the model necessarily involves management, and this may give deeper insight into the problem.

- A well-constructed model enables the results of various policies and decisions to be examined without any irreversible commitment being made.

- Simulation is cheaper and less risky than altering the real system.

- The behavior of a system can be studied without building it.

- Results are generally accurate compared with an analytical model.

- It helps to find unexpected phenomena and behavior of the system.

- It is easy to perform “What-If” analysis.

Disadvantages of simulation

- Although all models are simplifications of reality, they may still be complex and require a substantial amount of managerial and technical time.

- Practical simulation inevitably requires the use of computers, so a considerable amount of additional expertise is required to obtain worthwhile results from a simulation exercise. This expertise is not always available.

- Simulation does not produce optimal results.

- It is expensive to build a simulation model.

- It is expensive to conduct a simulation.

- It is sometimes difficult to interpret the simulation results.

Explain the Monte Carlo method of simulation, pointing out its uses in operations research.

The Monte Carlo method covers a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. A simulation is run many times over so that the probabilities of interest can be estimated heuristically, much like actually playing in a real casino and recording the results, hence the name. These methods are often used in physical and mathematical problems and are best suited to cases where it is impossible to obtain a closed-form expression or infeasible to apply a deterministic algorithm.

Monte Carlo methods are mainly used in three distinct problems: optimization, numerical integration and generation of samples from a probability distribution. Monte Carlo methods are especially useful for simulating systems with many coupled degrees of freedom, disordered materials, strongly coupled solids, and cellular structures. They are used to model phenomena with significant uncertainty in inputs, such as the calculation of risk in business. They are widely used in mathematics, for example to evaluate multidimensional definite integrals with complicated boundary conditions. When Monte Carlo simulations have been applied in space exploration and oil exploration, their predictions of failures, cost overruns and schedule overruns are routinely better than human intuition or alternative “soft” methods.
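As a small illustration of the numerical integration use, the sketch below estimates a one-dimensional definite integral by averaging the integrand at random points. The function name `mc_integrate` and the choice of integrand are assumptions made for the example only.

```python
import random

def mc_integrate(f, a, b, n_samples, seed=0):
    """Estimate the integral of f over [a, b] by repeated random sampling."""
    rng = random.Random(seed)
    total = sum(f(rng.uniform(a, b)) for _ in range(n_samples))
    # (b - a) times the average value of f approximates the integral.
    return (b - a) * total / n_samples

if __name__ == "__main__":
    # The exact value of the integral of x^2 over [0, 1] is 1/3.
    for n in (100, 10_000, 1_000_000):
        print(n, mc_integrate(lambda x: x * x, 0.0, 1.0, n))
```

The estimate generally tightens as the number of samples grows, which is why Monte Carlo studies are run "many times over".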

a) What is dynamic programming?

Dynamic programming is a multistage programming technique that takes a general approach to solving a large problem in sequential steps. It is a method for solving complex problems by breaking them down into simpler subproblems. It is applicable to problems exhibiting the properties of overlapping subproblems and optimal substructure.

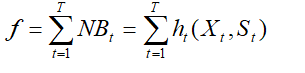

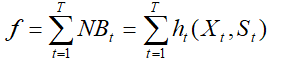

b) (i) State the recursive equation of a general dynamic programming problem

Recursive equations are used to solve a problem in sequence.

Considering a serial multistage problem, the objective equation is stated as follows:
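The equation itself is not reproduced in the text at this point. One common textbook form of the backward recursion for a serial multistage problem, given here as an assumption rather than as the notation originally intended, is:

$$
f_n(s_n) = \underset{x_n}{\operatorname{opt}} \left[ r_n(s_n, x_n) + f_{n-1}(s_{n-1}) \right], \qquad s_{n-1} = t_n(s_n, x_n), \qquad f_0(s_0) = 0
$$

In this assumed notation, $n$ is the number of stages remaining, $s_n$ is the state at stage $n$, $x_n$ is the decision taken there, $r_n$ is the return earned at that stage, $t_n$ is the transition to the next state, and $\operatorname{opt}$ stands for max or min depending on the problem.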

(ii) State Bellman’s principle of optimality

(iii) Outline the main characteristics of dynamic programming

Simulation is the imitation of the operation of a real-world process or system over time. The act of simulating something first requires that a model be developed; this model represents the key characteristics or behaviors of the selected physical or abstract system or process. The model represents the system itself, whereas the simulation represents the operation of the system over time.

Simulation is used in many contexts, such as simulation of technology for performance optimization, safety engineering, testing, training, education, and video games. Training simulators include flight simulators for training aircraft pilots to provide them with a lifelike experience. Simulation is also used with scientific modelling of natural systems or human systems to gain insight into their functioning.[2] Simulation can be used to show the eventual real effects of alternative conditions and courses of action. Simulation is also used when the real system cannot be engaged, because it may not be accessible, or it may be dangerous or unacceptable to engage, or it is being designed but not yet built, or it may simply not exist.[3]

Key issues in simulation include acquisition of valid source information about the relevant selection of key characteristics and behaviours, the use of simplifying approximations and assumptions within the simulation, and fidelity and validity of the simulation outcomes.

First, the advantages. Simulations can help in cases where mathematical models are not applicable because the system to be modeled is too complex, its behavior cannot be expressed by mathematical equations, or it involves uncertainty, i.e. stochastic elements. In any of these cases, simulation can be applied as a last resort to gain information about the system.

Simulation is especially useful if changes are to be made to an existing system and the effects of the changes should be tested prior to implementation. Trying out the changes in the real system may not be an option because the system does not yet exist, the costs are too high, there are too many scenarios to test, the test would take too much time (weeks, months, years), the changes are not legal, etc. In all these cases, a simulation model allows various scenarios to be tested, often in only a few minutes or hours.

A disadvantage of simulation in comparison to exact mathematical methods is that simulation cannot naturally be used to find an optimal solution. There are methods that aim to optimize the result, but simulation is not inherently an optimization tool. On the other hand, simulation is often the only means of approaching the analysis of complex systems. Many systems cannot be modeled with mathematical equations, and simulation is then the only way to get information at all.

Another disadvantage is that it can be quite expensive to build a simulation model. First, the process that is to be modeled must be well understood, although a simulation can often help to understand a process better. The most expensive part of creating a simulation model is the collection of data to feed the simulation, and to determine stochastic distributions (e.g. processing times, arrival rates etc.).

Another key point is to ensure the model is valid, i.e. its behavior mirrors that of the original (physical) system. This is especially hard for systems that do not exist yet because simulation is being used to plan them. Insufficient validation and verification of a simulation model is one of the top reasons for failing simulation projects. The consequence is false results, and this lessens the credibility of the method in general.

Pros

There are two big advantages to performing a simulation rather than actually building the design and testing it. The biggest of these advantages is money. Designing, building, testing, redesigning, rebuilding and retesting anything can be an expensive project. Simulations take the building/rebuilding phase out of the loop by using the model already created in the design phase. Most of the time, simulation testing is cheaper and faster than performing multiple tests of the design each time. Considering the typical university budget, cheaper is usually a very good thing. In the case of an electric thruster, the test must be run inside a vacuum tank. Vacuum tanks are very expensive to buy, run, and maintain. One of the main tests of an electric thruster is the lifetime test, which means that the thruster runs pretty much constantly inside the vacuum tank for 10,000+ hours. This is pouring money down a drain compared to the price of the simulation.

The second biggest advantage of a simulation is the level of detail that you can get from it. A simulation can give you results that are not experimentally measurable with our current level of technology. Results such as surface interactions on an atomic level, flow at the exit of a micro electric thruster, or molecular flow inside a star are not measurable by any current devices. A simulation can give these results even when experimental obstacles arise: the feature is too small to measure, the probe is so big that it skews the results, or any instrument would turn to gas at those temperatures. You can set the simulation to run for as many time steps as you desire and at any level of detail you desire. The only restrictions are your imagination, your programming skills, and your CPU.

Cons

There are two big disadvantages to performing a simulation as well. The first of these disadvantages is simulation errors. Any incorrect keystroke has the potential to alter the simulation and give you the wrong results. Also, we are usually programming using theories of the way things work, not laws, and theories are often not 100% correct. Before you can trust your simulation to give accurate results, you must first run a baseline to prove that it works. In order for the simulation to be accepted in the general community, you have to take experimental results and simulate them. If the two data sets agree, then any simulation you do of your own design will have some credibility.

The other large disadvantage is the fact that it is a simulation. Many people do not consider what they do engineering unless they can see, hear, feel, and taste the project. If you are designing a light saber, a typical engineer needs to be able to hold the light saber in their hand in order to consider the project worth their time. If you are capable of moving your craft into the virtual world of simulations, you are no longer restricted by little things like reality. If you want to design a light saber in the virtual world it is not a problem, but in reality that is another matter altogether. The virtual world is difficult to get used to the first time you use it for design, but after that the sky isn’t even your limit.

Monte Carlo methods (or Monte Carlo experiments) are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. A simulation is run many times over so that the probabilities of interest can be estimated heuristically, much like actually playing in a real casino and recording the results, hence the name. They are often used in physical and mathematical problems and are best suited to cases where it is impossible to obtain a closed-form expression or infeasible to apply a deterministic algorithm. Monte Carlo methods are mainly used in three distinct problems: optimization, numerical integration and generation of samples from a probability distribution.

Monte Carlo methods are especially useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model). They are used to model phenomena with significant uncertainty in inputs, such as the calculation of risk in business. They are widely used in mathematics, for example to evaluate multidimensional definite integrals with complicated boundary conditions. When Monte Carlo simulations have been applied in space exploration and oil exploration, their predictions of failures, cost overruns and schedule overruns are routinely better than human intuition or alternative “soft” methods.[1]

Dynamic programming

In mathematics, computer science, and economics, dynamic programming is a method for solving complex problems by breaking them down into simpler subproblems. It is applicable to problems exhibiting the properties of overlapping subproblems[1] and optimal substructure (described below). When applicable, the method takes far less time than naive methods.

The idea behind dynamic programming is quite simple. In general, to solve a given problem, we need to solve different parts of the problem (subproblems), then combine the solutions of the subproblems to reach an overall solution. Often, many of these subproblems are really the same. The dynamic programming approach seeks to solve each subproblem only once, thus reducing the number of computations: once the solution to a given subproblem has been computed, it is stored or “memoized”, and the next time the same solution is needed, it is simply looked up. This approach is especially useful when the number of repeating subproblems grows exponentially as a function of the size of the input.

Dynamic programming algorithms are used for optimization (for example, finding the shortest path between two points, or the fastest way to multiply many matrices). A dynamic programming algorithm will examine all possible ways to solve the problem and will pick the best solution. Therefore, we can roughly think of dynamic programming as an intelligent, brute-force method that enables us to go through all possible solutions to pick the best one. If the scope of the problem is such that going through all possible solutions is possible and fast enough, dynamic programming guarantees finding the optimal solution. There are many alternatives, such as using a greedy algorithm, which picks the best possible choice “at any possible branch in the road”. While a greedy algorithm does not guarantee the optimal solution, it is faster. Fortunately, some greedy algorithms (such as those for minimum spanning trees) are proven to lead to the optimal solution.

For example, let’s say that you have to get from point A to point B as fast as possible, in a given city, during rush hour. A dynamic programming algorithm will look into the entire traffic report, looking into all possible combinations of roads you might take, and will only then tell you which way is the fastest. Of course, you might have to wait for a while until the algorithm finishes, and only then can you start driving. The path you will take will be the fastest one (assuming that nothing changed in the external environment). On the other hand, a greedy algorithm will start you driving immediately and will pick the road that looks the fastest at every intersection. As you can imagine, this strategy might not lead to the fastest arrival time, since you might take some “easy” streets and then find yourself hopelessly stuck in a traffic jam.
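The contrast can be sketched on a toy road map. The map `ROADS`, its travel times, and the function names `dp_fastest` and `greedy_fastest` are all hypothetical, chosen so that the road that looks fastest at the first intersection leads into the "traffic jam".

```python
from functools import lru_cache

# Hypothetical travel times in minutes between intersections (one-way roads).
ROADS = {
    "A": {"B": 1, "C": 4},
    "B": {"D": 10},   # looks good from A, but the next leg is jammed
    "C": {"D": 2},
    "D": {},
}

def dp_fastest(start, goal):
    """Dynamic programming: solve each sub-route once, then combine."""
    @lru_cache(maxsize=None)
    def best(node):
        if node == goal:
            return 0, (goal,)
        options = []
        for nxt, minutes in ROADS[node].items():
            cost, path = best(nxt)
            options.append((minutes + cost, (node,) + path))
        # A dead end gets infinite cost so it is never chosen.
        return min(options) if options else (float("inf"), (node,))
    return best(start)

def greedy_fastest(start, goal):
    """Greedy: at every intersection take the road that looks fastest now.

    Assumes every road eventually reaches the goal, as in ROADS above.
    """
    node, total, path = start, 0, [start]
    while node != goal:
        nxt, minutes = min(ROADS[node].items(), key=lambda kv: kv[1])
        total += minutes
        path.append(nxt)
        node = nxt
    return total, tuple(path)

if __name__ == "__main__":
    print("dynamic programming:", dp_fastest("A", "D"))
    print("greedy:             ", greedy_fastest("A", "D"))
```

On this map the greedy route starts with the 1-minute road and ends up slower overall, while the dynamic programming route accepts a slower first leg to arrive sooner.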

A technique closely related to dynamic programming is memoization, i.e. recording intermediate results. Many problems can be solved in a basic recursive way. The problem with plain recursion is that it does not remember intermediate results, so they have to be calculated over and over again. A simple solution is to store intermediate results. This approach is called memoization (not “memorization”). Dynamic programming algorithms are more sophisticated than basic memoization algorithms and thus can be faster.
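A minimal sketch of the difference, using Fibonacci numbers as a stand-in problem (the choice of problem and the function names are assumptions for illustration): the plain recursive version recomputes the same subproblems many times, the memoized version stores them, and the bottom-up version fills in the answers in order without recursion.

```python
from functools import lru_cache

def fib_naive(n, counter):
    """Plain recursion: intermediate results are recomputed every time."""
    counter[0] += 1            # count how many calls are made
    if n < 2:
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)

@lru_cache(maxsize=None)
def fib_memo(n):
    """Memoization: each intermediate result is stored after its first use."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)

def fib_bottom_up(n):
    """Bottom-up dynamic programming: build from the smallest subproblem."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a

if __name__ == "__main__":
    calls = [0]
    print(fib_naive(20, calls), "computed with", calls[0], "recursive calls")
    print(fib_memo(20), "computed with memoization")
    print(fib_bottom_up(20), "computed bottom-up")
```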

Monte Carlo and random numbers

Monte Carlo simulation methods do not always require truly random numbers to be useful, although for some applications, such as primality testing, unpredictability is vital.[10] Many of the most useful techniques use deterministic, pseudorandom sequences, making it easy to test and re-run simulations. The only quality usually necessary to make good simulations is for the pseudo-random sequence to appear “random enough” in a certain sense.

What this means depends on the application, but typically the numbers should pass a series of statistical tests. One of the simplest and most common tests checks that the numbers are uniformly distributed, or follow another desired distribution, when a large enough number of elements of the sequence is considered.
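Both points can be sketched with Python's standard `random` module: a fixed seed makes a run repeatable, and a simple chi-square statistic checks how evenly the numbers fall into bins. The helper names `draw` and `chi_square_uniform`, the choice of 10 bins, and the 5% significance level are assumptions made for this example.

```python
import random

def draw(seed, n):
    """Return n pseudo-random numbers in [0, 1) from a seeded generator."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]

def chi_square_uniform(samples, n_bins=10):
    """Chi-square statistic of samples in [0, 1) against a uniform distribution."""
    counts = [0] * n_bins
    for x in samples:
        counts[min(int(x * n_bins), n_bins - 1)] += 1
    expected = len(samples) / n_bins
    return sum((c - expected) ** 2 / expected for c in counts)

if __name__ == "__main__":
    first = draw(42, 10_000)
    second = draw(42, 10_000)
    print("same seed gives the same sequence:", first == second)
    stat = chi_square_uniform(first)
    # 16.92 is the 5% critical value of chi-square with 9 degrees of freedom.
    print(f"chi-square = {stat:.2f} (values below 16.92 are consistent with uniformity)")
```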

Sawilowsky lists the characteristics of a high quality Monte Carlo simulation:[9]

- the (pseudo-random) number generator has certain characteristics (e.g., a long “period” before the sequence repeats)

- the (pseudo-random) number generator produces values that pass tests for randomness

- there are enough samples to ensure accurate results

- the proper sampling technique is used

- the algorithm used is valid for what is being modeled

- it simulates the phenomenon in question.

Pseudo-random number sampling algorithms are used to transform uniformly distributed pseudo-random numbers into numbers that are distributed according to a given probability distribution.
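One standard way to do this is inverse transform sampling. The sketch below, with the hypothetical helper `sample_exponential`, turns uniform numbers into exponentially distributed ones (for example, times between arrivals) by applying the inverse of the exponential cumulative distribution function.

```python
import math
import random

def sample_exponential(rate, rng):
    """Transform one uniform number into an exponential(rate) sample."""
    u = rng.random()                      # uniform on [0, 1)
    return -math.log(1.0 - u) / rate      # inverse of F(x) = 1 - exp(-rate * x)

if __name__ == "__main__":
    rng = random.Random(0)
    samples = [sample_exponential(2.0, rng) for _ in range(100_000)]
    # The mean of an exponential distribution with rate 2 is 1 / 2.
    print("sample mean:", sum(samples) / len(samples))
```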

Low-discrepancy sequences are often used instead of random sampling from a space as they ensure even coverage and normally have a faster order of convergence than Monte Carlo simulations using random or pseudorandom sequences. Methods based on their use are called quasi-Monte Carlo methods.
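A minimal one-dimensional sketch uses the van der Corput sequence, a classic low-discrepancy sequence. The function names and the test integrand are assumptions for illustration; practical quasi-Monte Carlo work usually relies on multi-dimensional sequences.

```python
import random

def van_der_corput(i, base=2):
    """Return the i-th point (i >= 1) of the van der Corput sequence."""
    value, denom = 0.0, 1.0
    while i > 0:
        i, digit = divmod(i, base)
        denom *= base
        value += digit / denom     # mirror the digits of i around the radix point
    return value

def qmc_integrate(f, n):
    """Quasi-Monte Carlo estimate of the integral of f over [0, 1]."""
    return sum(f(van_der_corput(i)) for i in range(1, n + 1)) / n

def mc_estimate(f, n, seed=0):
    """Ordinary Monte Carlo estimate with pseudo-random points, for comparison."""
    rng = random.Random(seed)
    return sum(f(rng.random()) for _ in range(n)) / n

if __name__ == "__main__":
    f = lambda x: x * x            # exact integral over [0, 1] is 1/3
    for n in (64, 1024, 16_384):
        print(n, abs(qmc_integrate(f, n) - 1 / 3), abs(mc_estimate(f, n) - 1 / 3))
```

The first points of the sequence are 0.5, 0.25, 0.75, so each new point falls into the largest remaining gap, which is what gives the even coverage mentioned above.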

Monte Carlo simulation versus “what if” scenarios

There are ways of using probabilities that are definitely not Monte Carlo simulations — for example, deterministic modeling using single-point estimates. Each uncertain variable within a model is assigned a “best guess” estimate. Scenarios (such as best, worst, or most likely case) for each input variable are chosen and the results recorded.[11]

By contrast, Monte Carlo simulations sample from a probability distribution for each variable to produce hundreds or thousands of possible outcomes. The results are analyzed to get probabilities of different outcomes occurring.[12] For example, a comparison of a spreadsheet cost construction model run using traditional “what if” scenarios, and then run again with Monte Carlo simulation and triangular probability distributions, shows that the Monte Carlo analysis has a narrower range than the “what if” analysis. This is because the “what if” analysis gives equal weight to all scenarios (see quantifying uncertainty in corporate finance), while the Monte Carlo method hardly samples in the very low probability regions. The samples in such regions are called “rare events”.
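The comparison can be sketched with a toy cost model. The cost items and their figures in `COST_ITEMS` are invented for illustration, as are the function names; only `random.triangular` is a real standard-library call.

```python
import random

# Hypothetical cost items: (low, most likely, high), in thousands.
COST_ITEMS = {
    "foundations": (80, 100, 150),
    "structure": (200, 240, 320),
    "finishes": (60, 75, 110),
}

def what_if_scenarios(items):
    """Single-point estimates: best, most likely and worst case totals."""
    best = sum(low for low, _, _ in items.values())
    likely = sum(mode for _, mode, _ in items.values())
    worst = sum(high for _, _, high in items.values())
    return best, likely, worst

def monte_carlo_totals(items, n_runs=10_000, seed=0):
    """Sample each item from a triangular distribution; return sorted totals."""
    rng = random.Random(seed)
    totals = [
        sum(rng.triangular(low, high, mode) for low, mode, high in items.values())
        for _ in range(n_runs)
    ]
    return sorted(totals)

def percentile(sorted_values, q):
    """Simple percentile lookup on an already sorted list (0 <= q <= 1)."""
    return sorted_values[int(q * (len(sorted_values) - 1))]

if __name__ == "__main__":
    best, likely, worst = what_if_scenarios(COST_ITEMS)
    totals = monte_carlo_totals(COST_ITEMS)
    print(f"what-if range:       {best} to {worst} (most likely {likely})")
    print(f"Monte Carlo 5%-95%:  {percentile(totals, 0.05):.0f} to {percentile(totals, 0.95):.0f}")
```

All items hitting their best or worst values at the same time is a rare event, so the sampled totals cluster well inside the what-if extremes.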